Originally published: 10 December 2025

This was originally published as this PDF file:

Content

1. Executive summary

As part of the initial decisions of the Research Excellence Framework (REF) 2029, the funding bodies announced their intention to increase focus on the assessment of the conditions that are an essential feature of research excellence through the submission of statements relating to people, research culture and research environment (PCE).

Two interconnected projects developed and tested a range of indicators of research culture for potential consideration in the development of assessment of PCE in REF 2029:

The PCE Indicators Project was commissioned by the four UK higher education funding bodies and was led by Technopolis and CRAC-Vitae in collaboration with a number of sector bodies. The project focussed on developing a list of indicators which could be utilised in the assessment of PCE in the REF. The project team engaged extensively with the research community through workshops and a sector survey to co-develop an assessment framework and a list of indicators to be used to evidence and support institutions’ approaches to PCE.

The PCE Pilot trialled the proposed framework and indicators across a representative range of units of assessment (UoAs) and a range of HEIs, selected to give a range of institutional sizes or unit sizes, a range and breadth of provision, and a range of institutional missions. The Framework, Assessment Criteria, Quality Descriptors and indicators in the guidance for the pilot were all in draft form and provided only as a starting point for the participating HEIs and the assessment panels. These were all expected to be refined during (and following) the pilot. The pilot should be seen as an experiment to explore the possible approaches to assessment of PCE, not as a ‘dry run’ of the near-final framework for the participating HEIs. In particular, it should be noted that any assessment process developed through the pilot will need to be situated in the wider REF assessment; for example, the pilot did not examine assessment of Contribution to Knowledge and Understanding (CKU) or Engagement and Impact (E&I).

During the pilot a selection of HEIs prepared submissions in a sample of UoAs, and also at institution-level. The submissions were assessed by a group of panels composed of members with expertise in research assessment and in PCE generally within academia. The participating HEIs and the assessment panels provided extensive feedback on the development of PCE submissions and on their assessment. The primary objective of the pilot was to evaluate the assessment process, and its primary outcome is a realistic assessment of the feasibility of carrying out assessment of PCE in a ‘full-scale’ REF exercise. Feedback from these stakeholders was central to achieving this aim.

The framework tested in the pilot comprised five factors which enable positive research culture. These were:

- Strategy

- Responsibility

- Connectivity

- Inclusivity

- Development

A number of indicators were identified within each of these enablers, and sources of quantitative and qualitative evidence were suggested which could be used to illustrate performance in PCE. The intention was for the scope of the pilot to be as wide as possible, incorporating a broad range of indicators in order to assess which indicators and evidence sources were most amenable to the assessment of PCE.

A sample of 40 HEIs produced 115 submissions at unit-level across eight UoAs, and 40 institution-level submissions for assessment in the pilot. Participating HEIs took a wide variety of approaches to their submissions, and this was helpful in evaluating what was possible for HEIs to provide and what was useful for the assessment panels.

The submissions were assessed by the pilot panels. 165 panel members were recruited to conduct the assessment across the eight UoAs and at the institution-level. An open approach was taken to recruitment and careful consideration was given to the expertise required for the panels. Panels needed to include not just academic expertise, but also specialists who are well-placed for the assessment of PCE. Panels conducted their assessment through a series of in-person and virtual meetings, evaluating each of the submissions against the draft criteria and quality descriptors to arrive at a score for each of the enabling factors for each submission.

No formal calibration was conducted within or between the assessment panels and HEIs took a wide variety of approaches in their submissions. Therefore, direct comparisons in the pilot of one institution to another, or of one UoA to another, would not be robust. The assessment of PCE in REF 2029 will be different from the assessment conducted in the pilot; the results of the pilot should therefore not be interpreted as an indication of expected performance in REF 2029. Overall, scores across the pilot were lower than those for Environment assessment in REF 2021; this reflects the exploratory nature of the submissions and assessment processes being evaluated in the pilot.

Participating HEIs and assessment panels gave feedback on the approach tested in the pilot. This feedback allowed the development of conclusions about the assessment of PCE, though development of the piloted approach would be necessary before it could be applied in the REF 2029 assessment.

Feasibility of PCE assessment

The pilot concluded that it would be possible to define and assess excellence in PCE, but that the approach utilised in the pilot would need to be adapted for a full-scale REF assessment. Panels were confident that evidence provided was sufficient to build an approach that would reach robust judgements. Lower overall scores in the pilot reflect the exploratory nature of the exercise. HEIs were encouraged to experiment with their submissions and therefore a lower average score should not be unexpected. Variation in scores between enablers or between UoAs should be attributed to different approaches taken by the assessment panels and should not be considered as differences in the quality of PCE approaches between different subject areas. There was a clear trend with larger institutions scoring higher on average than smaller institutions. Despite the panels’ efforts to be as inclusive as possible in their approach to the assessment, evidence was often lacking for smaller institutions and units, and the panels were sometimes less confident in awarding higher scores. However, differences could also be attributed to different approaches taken by the participating HEIs in developing their submissions and should not be interpreted as differences in the quality of the PCE operating at smaller institutions.

Simplification of the framework and rationalisation of the indicators

The piloted framework was largely fit for purpose, but the list of indicators tested was more than necessary to reach a robust judgement. For the pilot, a wide range of examples of quantitative and qualitative evidence and contextual information were suggested, and HEIs were also encouraged to provide any evidence they felt appropriate to represent their performance in PCE. The aim of the pilot was to test these potential indicators with the expectation of focussing on a tighter set for the full-scale assessment. Careful consideration was given to the rationalisation of the list of indicators and evidence. A list of indicators is provided where there was reasonable agreement that they may be useful for assessment in REF 2029.

Any evidence or data required for the assessment should be widely available; the assessment should not require the retrospective collection of data or collection of new types of data. Consideration should be given to the automatic collection of data (for example through HESA).

Indicators which are not suggested for consideration in the assessment framework for REF 2029 are still deemed important. They should not be discounted, and due consideration should be given to how they may be developed for inclusion in future exercises.

The assessment framework should be simplified wherever possible before being utilised in a full-scale exercise; for example, the five enabling factors outlined above could be combined or rationalised to produce a more streamlined framework. The pilot tested three assessment criteria (Vitality, Sustainability and Rigour); the criterion of Rigour was not considered to be required in addition to the criteria of Vitality and Sustainability (which were used previously for the assessment of REF Environment), though the need for institutions to reflect on their practice was an important part of the assessment.

Institution-level and unit-level assessment and support for diversity of research approaches/missions/operating models

Recognition of operating context is critical to the assessment of PCE. PCE assessment in the REF should be supportive of the wide range of approaches, strategies and missions in the sector. Not all HEIs are operating with the same resources, and they are at different stages in their ‘PCE journeys’; this needs to be recognised and accounted for in the assessment. The highest scores should be achievable by any HEI working efficiently in focussed areas which have been targeted for strategic reasons.

Assessment at both institution-level and unit-level is important to capture differences between support for different units, such as differing performance between units in an institution, or if the performance of the unit is out-of-step with that of the institution. The pilot suggested that institution-level and unit-level templates need to be integrated, and that institution-level statements should articulate policies and the strategic need for them, and unit-level statements should explain how policies are delivered and the impacts of those policies.

Overall conclusions

The pilot aimed to inform the development of robust, inclusive and meaningful approaches to the assessment of research culture. Research environment, including all people delivering research, provides the underpinning basis of excellence in the research system.

The PCE pilot has been a valuable reflective exercise. The experimental nature of the pilot has allowed us to observe both the opportunities for the future assessment of research, as well as the legitimate concerns about burden, clarity, and fairness. Participating institutions and assessment panels appreciated the opportunity to engage with research culture more deeply. Evidence provided points strongly towards a great deal of excellent practice which is already happening within the sector. However, the need for clearer guidance, streamlined processes and frameworks for a full-scale exercise was emphasised.

2. Introduction

The Research Excellence Framework (REF) is the UK’s system for assessing the excellence of research in UK higher education institutions (HEIs). It first took place in 2014 and was conducted again in 2021. The next exercise is planned to complete in 2029.

The REF outcomes are used to inform the allocation of around £2 billion per year of public funding for universities’ research. The REF is a process of expert review, carried out by sub-panels focussed on subject-based units of assessment (UoAs), under the guidance of overarching main panels and advisory panels.

The purpose of the REF is to:

- inform the allocation of block-grant research funding to HEIs based on research quality

- provide accountability for public investment in research and produce evidence of the benefits of this investment

- provide insights into the health of research in HEIs in the UK

Development between exercises is necessary to reflect the changing research landscape. Therefore, the four UK higher education funding bodies launched the Future Research Assessment Programme (FRAP) to inform the development of the next REF. The funding bodies published initial decisions on high-level design of the next REF in June 2023.

Redesigning the UK’s national research assessment exercise offers an opportunity to reshape the incentives within the research system and rethink what should be recognised and rewarded. Changes for REF 2029 include an expansion of the definition of research excellence to ensure appropriate recognition is given to the people, culture and environments that underpin a vibrant and sustainable UK research system.

The three elements of the assessment have been renamed for REF 2029 and their content adjusted to reflect this. The weightings between the three elements will also be rebalanced.

- People, Culture and Environment (PCE)

- Contribution to Knowledge and Understanding (CKU)

- Engagement and Impact (E&I)

Changes to the three assessment elements used will allow REF 2029 to recognise and reward a broader range of research outputs, activities and impacts and reward those institutions that strive to create a positive research culture and nurture their research and research-enabling staff.

The PCE element replaces the environment element of REF 2014 and 2021 and will be expanded to further support the assessment of research culture. Evidence to inform assessment of this element will be collected at both institution-level and at unit-level.

The consultation on the initial decisions, published in the summer of 2023, probed the community’s thinking on most of the major decisions. In addition, feedback was specifically sought on the PCE element of the assessment.

The PCE Indicators Project

A project led by Technopolis and CRAC-Vitae in collaboration with a number of sector organisations was commissioned by the four UK higher education funding bodies. The project was focussed on developing a list of indicators which could be utilised in the assessment of PCE in the REF. The project team engaged extensively with the research community through workshops and a sector survey to co-develop an assessment framework and a list of indicators to be used to evidence and support institutions’ PCE submissions.

The PCE Pilot

The REF team developed and delivered the PCE Pilot to trial the assessment of PCE within REF. The framework and indicators developed by the PCE Indicators Project were developed further in consultation with the REF Steering Group for use in the pilot. The intention was for the scope of the pilot to be as wide as possible, incorporating as broad a range of indicators as possible in order to assess which indicators and evidence sources were most amenable to the assessment of PCE.

The Framework, Assessment Criteria, Quality Descriptors and indicators in the guidance for the pilot were provided in draft form as a starting point for the participating HEIs and the assessment panels. These were all expected to be refined during the pilot. The pilot should be seen as an experiment to explore the possible approaches to assessment of PCE, not as a ‘dry run’ of the near-final framework for the participating HEIs.

A selection of HEIs prepared submissions in a sample of UoAs, and also at institution-level. The submissions were assessed by a group of panels composed of members with expertise in research assessment, and in PCE generally within academia. This assessment generated scores for the submissions prepared by the participating HEIs. However, the primary objective of the pilot was to assess the assessment process, and the primary outcome of the exercise is a realistic assessment of the feasibility of carrying out assessment of PCE in a ‘full-scale’ REF exercise.

The pilot focussed on developing the assessment process for PCE; however, any assessment process developed through the Indicators Project and the pilot will need to be situated in the wider REF assessment. For example, the pilot did not examine statements on Contribution to Knowledge and Understanding (CKU) or Engagement and Impact (E&I). The pilot also focussed on the indicators of excellent research culture highlighted in the PCE Indicators Project and therefore did not explicitly include income, infrastructure and facilities (as previously included under REF Environment), though it is anticipated that these will continue to be an important part of the REF Environment assessment and details will be provided as part of the wider REF 2029 guidance.

A timeline of the PCE work is available on the REF website, outlining at a high-level the key milestones of the PCE Indicators Project, and the PCE Pilot.

3. Methodology

- The pilot tested the framework and list of indicators developed by the PCE Indicators Project. The framework comprised five factors which enable positive research culture:

- Strategy

- Responsibility

- Connectivity

- Inclusivity

- Development

- A number of indicators were identified within each of these enablers, and sources of quantitative and qualitative evidence were suggested which could be used to illustrate performance in PCE. The intention was for the scope of the pilot to be as wide as possible, incorporating a broad range of indicators in order to assess which indicators and evidence sources were most amenable to the assessment of PCE.

- Units of assessment (UoAs) were selected for the pilot on the basis that they provided interesting areas to explore, such as representation of specialist institutions, practice-based research and non-traditional career paths. UoAs were also selected to be representative of the broader research landscape.

- A sample of 40 HEIs was recruited to produce submissions for assessment in the pilot. Participating institutions were selected to give a range of institutional sizes or unit sizes, and a range of breadth of provision, and a range of institutional missions.

- The HEIs prepared submissions incorporating evidence and narrative outlining their performance against a framework of five factors which enable positive research culture and ensure a well-supported research community.

- Participating HEIs took a wide variety of approaches to their submissions and this was helpful in evaluating what was possible for HEIs to provide, and what was useful for the assessment panels.

- In order to conduct the assessment, eight unit-level panels and an institution-level panel were recruited. An open approach was taken to recruitment and panels included not just academic expertise, but also specialists who were well-placed for the assessment of PCE.

- Assessment of the submissions was conducted by the assessment panels. The panels assessed submissions to produce scores, and provided feedback on the assessment process.

The framework and list of indicators developed by the PCE Indicators Project were developed further in consultation with the REF Steering Group for use in the pilot. It was necessary to focus the pilot in order to have a balance between a manageable amount of work to be delivered and providing sufficient evidence for a meaningful assessment of pilot indicators and approach to assessment. A key concern of the pilot was that the sample of material produced and outcomes of the assessment could be considered representative of the broader research landscape.

UoA selection

As in REF 2021, submissions in REF 2029 will be made to 34 UoAs. The resources required to run assessment of PCE across all of these UoAs would be excessive for the purposes of a pilot; therefore, the decision was taken to examine assessment in two UoAs from each main panel group (eight in total) and also at institution-level. This was intended to give a spread of different panels and disciplines, but also manage the effort being put into a pilot.

A number of criteria were considered when selecting UoAs.

- Consideration was given to which would be interesting UoAs to explore, meaning those with particular issues or conditions with PCE or with possible indicators. For example, this included ensuring there was representation of specialist institutions, practice-based research, non-traditional career paths, outputs, etc.

- Consideration was given to ensuring that there is sufficient material to assess. Therefore, UoAs were selected which had a reasonably large number of submissions or a reasonably high volume of FTE submitted to REF 2021.

- UoAs were selected which could be considered representative of the broader research landscape. Our expectation was that there would be some ‘read-across’ from piloted UoAs to infer similar outcomes and conclusions for the UoAs not included in the pilot.

The REF team liaised with the four REF 2021 main panel chairs. Advice was sought on which UoAs should be included in the PCE Pilot. The selected UoAs are outlined in Table 1. They provide a representation of each of the main panels, and together they represent more than 25% of each main panel group in terms of total FTE and in terms of number of submissions made to REF 2021.

In the table, the ‘% of MP FTE’ column shows what percentage of the total Main Panel (MP) FTE is represented by the UoAs selected for the pilot, and the ‘% of MP subs’ column shows what percentage of the total Main Panel submissions is represented by those UoAs. Both can be interpreted as indicating how representative of the Main Panel the selected UoAs are.

| UoAs | FTE | % of MP FTE | Number of subs | % of MP subs |

|---|---|---|---|---|

| 3 | 4,768.73 | 24 | 91 | 29 |

| 5 | 2,866.69 | 14 | 44 | 14 |

| Main Panel A total | 7,635.42 | 38 | 135 | 43 |

| 7 | 1,781.77 | 10 | 40 | 11 |

| 11 | 3,002.21 | 16 | 90 | 25 |

| Main Panel B total | 4,783.98 | 26 | 130 | 36 |

| 17 | 6,633.52 | 28 | 108 | 16 |

| 20 | 2,105.24 | 9 | 76 | 12 |

| Main Panel C total | 8,738.76 | 37 | 184 | 28 |

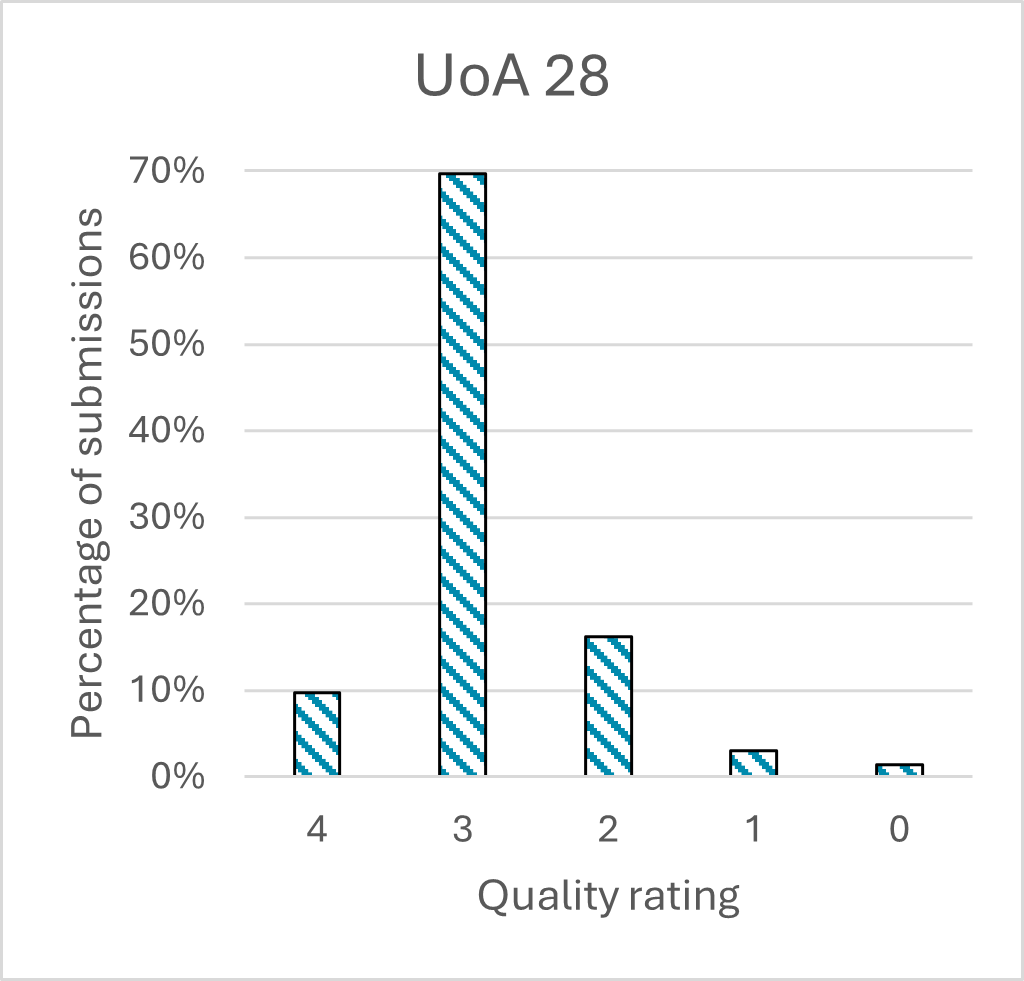

| 28 | 2,360.21 | 16 | 81 | 15 |

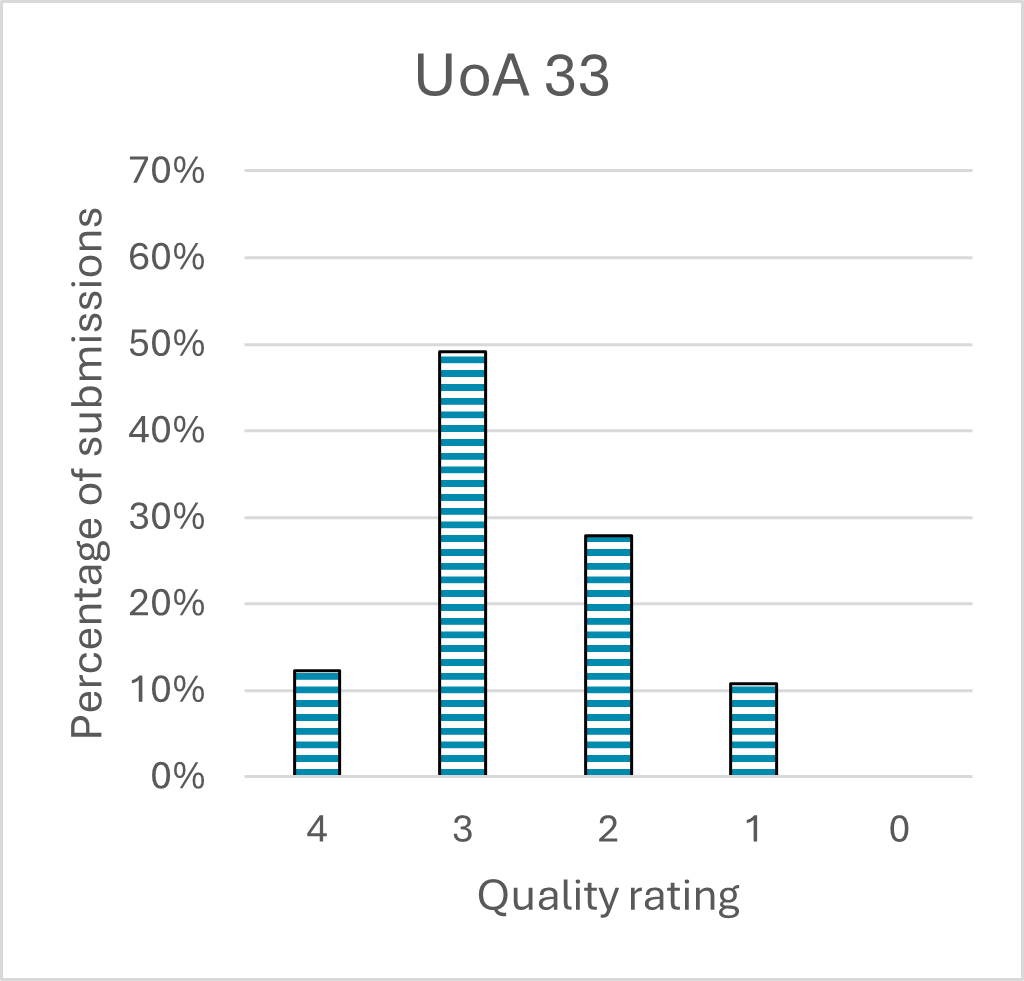

| 33 | 1,523.27 | 11 | 84 | 15 |

| Main Panel D total | 3,883.48 | 27 | 165 | 30 |
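As a quick consistency check, the Main Panel totals in the table can be recomputed from the two UoA rows listed above each total. The short Python sketch below does this using only the figures printed in the table. The grouping of UoAs under Main Panels A to D follows the order of the rows, and the variable and function names are illustrative only; they are not part of the pilot documentation.

```python
from collections import defaultdict

# UoA rows as printed in the table:
# (main panel, UoA, FTE, % of MP FTE, number of subs, % of MP subs)
UOA_ROWS = [
    ("A", 3, 4768.73, 24, 91, 29),
    ("A", 5, 2866.69, 14, 44, 14),
    ("B", 7, 1781.77, 10, 40, 11),
    ("B", 11, 3002.21, 16, 90, 25),
    ("C", 17, 6633.52, 28, 108, 16),
    ("C", 20, 2105.24, 9, 76, 12),
    ("D", 28, 2360.21, 16, 81, 15),
    ("D", 33, 1523.27, 11, 84, 15),
]


def main_panel_totals(rows):
    """Sum each column over the UoAs selected within each main panel."""
    totals = defaultdict(lambda: [0.0, 0, 0, 0])
    for panel, _uoa, fte, pct_fte, subs, pct_subs in rows:
        totals[panel][0] += fte
        totals[panel][1] += pct_fte
        totals[panel][2] += subs
        totals[panel][3] += pct_subs
    # Round FTE to two decimal places, matching the precision used in the table.
    return {p: (round(t[0], 2), t[1], t[2], t[3]) for p, t in totals.items()}


TOTALS = main_panel_totals(UOA_ROWS)
for panel, (fte, pct_fte, subs, pct_subs) in sorted(TOTALS.items()):
    print(f"Main Panel {panel}: FTE {fte:,.2f} ({pct_fte}%), subs {subs} ({pct_subs}%)")
```

The percentages in the table are rounded to whole numbers, so summing them could in principle differ by a point from a total calculated on unrounded data. For the figures shown here the sums match the printed totals.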

HEI selection

HEIs were recruited to produce PCE submissions for assessment in the pilot. In total, 157 institutions participated in REF 2021; however, it would not be feasible for all of these institutions to participate in the pilot. It was therefore decided to gather a sample of 40 institutions, in order to provide a manageable workload for the pilot while allowing for a diversity of institution type and institutional mission, and so yield robust results which could be applied across the sector.

HEIs were invited to apply for participation in the pilot through an open process. This was intended to give the broadest possible opportunities for institutions to participate. On application institutions were asked to indicate for which UoAs they would be able to prepare submissions. Expressions of interest were received from 85 HEIs wishing to participate in the pilot. Participating institutions were selected to give a range of institution sizes or unit sizes, and a range of breadth of provision (meaning some large and multi-faculty institutions, some small and specialist institutions and a variety of intermediate type institutions), and a range of institutional missions (e.g. research intensive or teaching focussed).

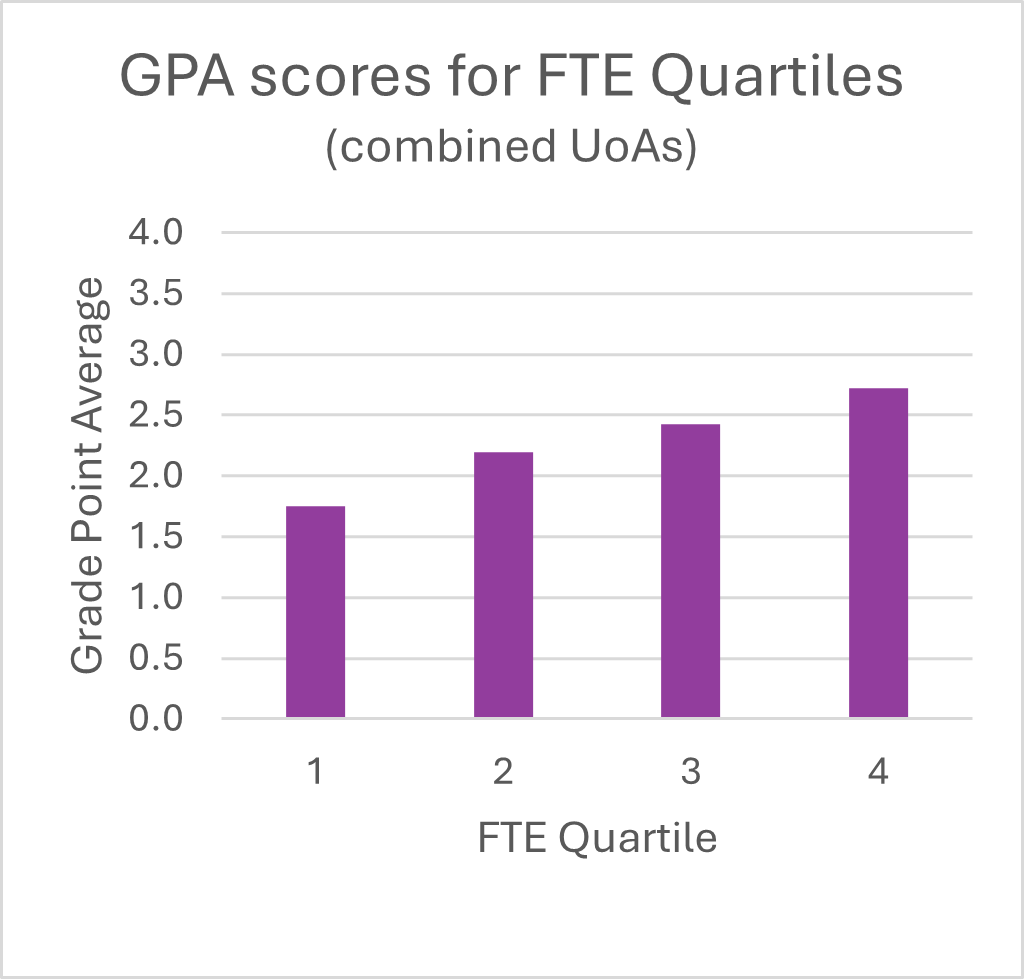

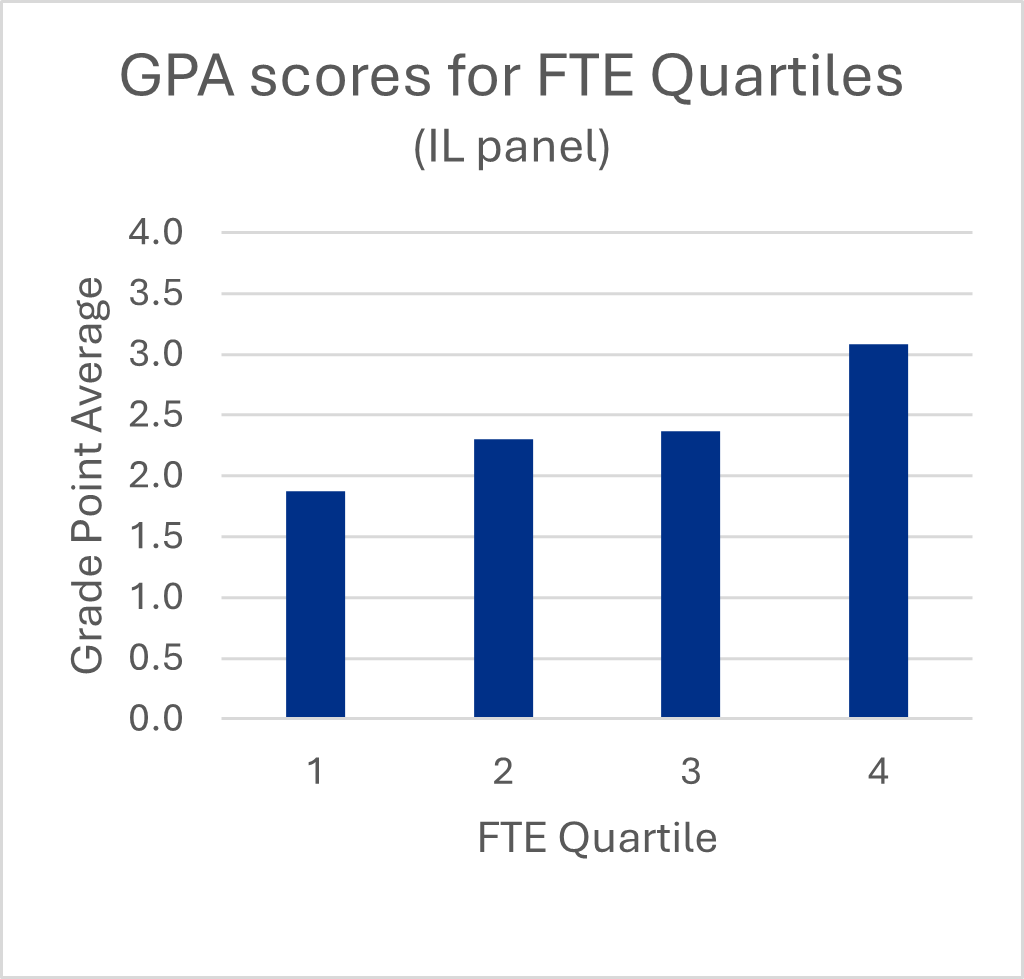

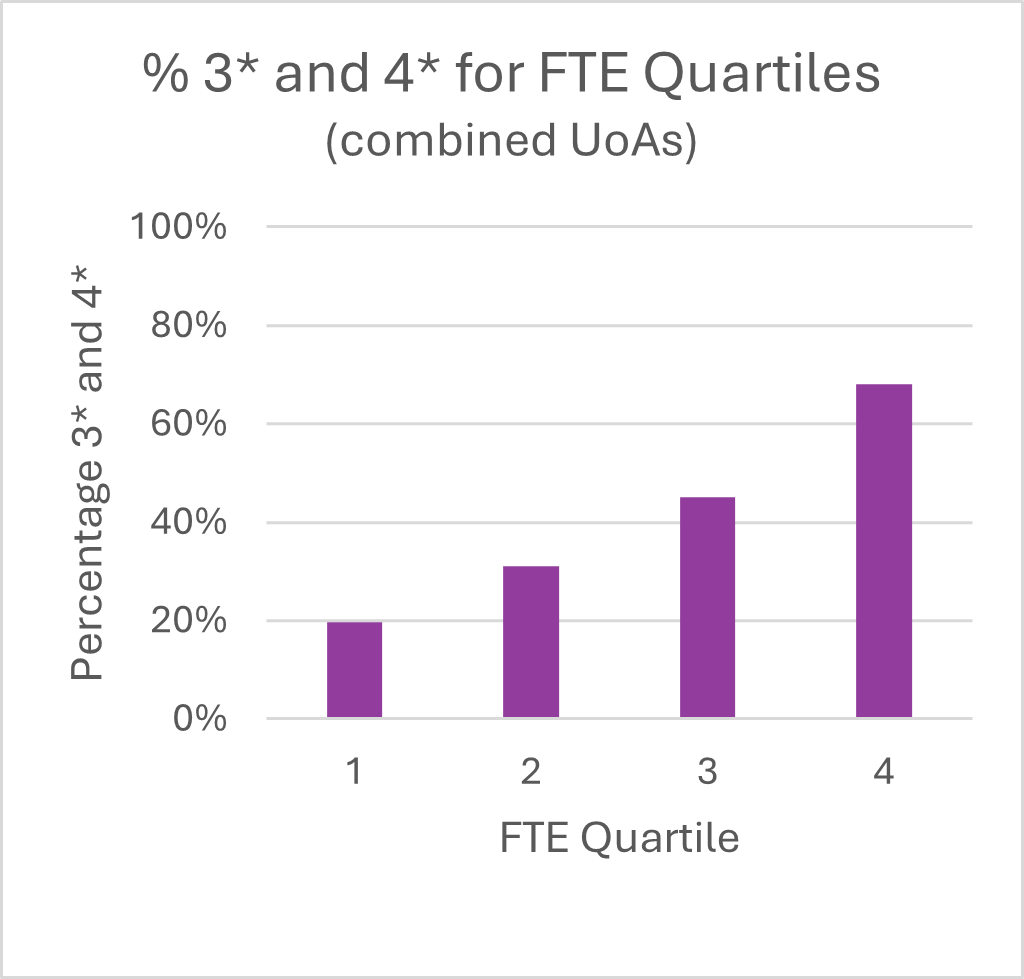

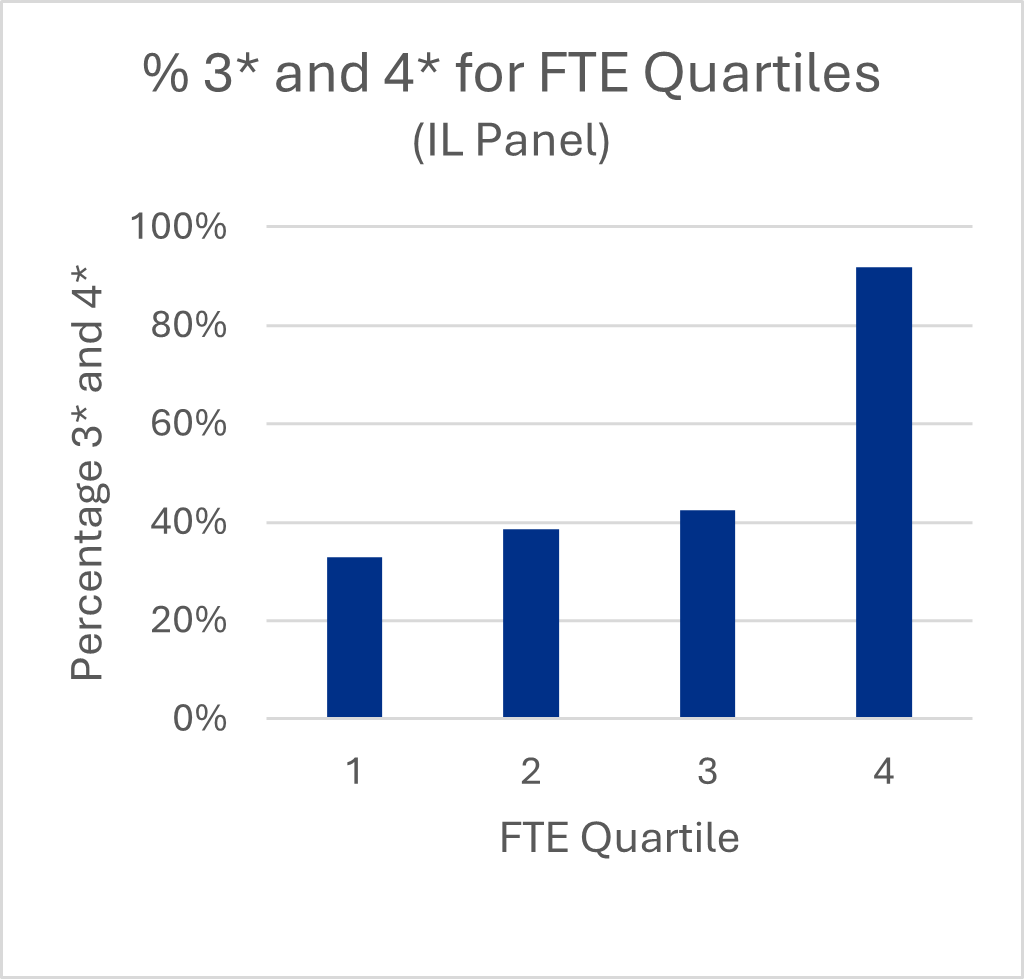

The criteria for selection were largely informed by data on submissions made to the REF 2021 exercise, using the size (FTE) of each institution’s REF 2021 submission as a workable proxy for research intensity. All of the institutions which participated in REF 2021 were listed in order of the total FTE they submitted to the exercise and the list was divided into four quartiles. A random selection was made from the institutions which applied, to produce an even mixture of small to large institutions (in terms of their REF submission size). The sample was then examined for spread of submissions across UoAs and for balance of UK nations and regions.
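For illustration only, the quartile-based selection described above might be sketched as follows. This is not the REF team’s actual procedure or code. It assumes that an equal number of institutions is drawn from each quartile (the report says only that an ‘even mixture’ was sought), it uses invented institution names and FTE values, and it does not model the subsequent checks for spread across UoAs or balance of nations and regions.

```python
import random


def assign_quartiles(fte_by_institution):
    """Rank institutions by submitted FTE and split the ranked list into four bands.

    Band 0 holds the smallest submissions and band 3 the largest.
    """
    ranked = sorted(fte_by_institution, key=fte_by_institution.get)
    n = len(ranked)
    return {name: (i * 4) // n for i, name in enumerate(ranked)}


def select_sample(fte_by_institution, applicants, per_band, seed=None):
    """Randomly draw up to `per_band` applicants from each FTE band."""
    rng = random.Random(seed)
    bands = assign_quartiles(fte_by_institution)
    sample = []
    for band in range(4):
        pool = sorted(a for a in applicants if bands[a] == band)
        # Assumption: the same number is taken from every band (fewer if the pool is short).
        sample.extend(rng.sample(pool, min(per_band, len(pool))))
    return sample


# Hypothetical data: 16 institutions with made-up FTE values, half of which applied.
FTE = {f"HEI-{i:02d}": 50.0 * (i + 1) for i in range(16)}
APPLICANTS = [name for i, name in enumerate(FTE) if i % 2 == 0]
SAMPLE = select_sample(FTE, APPLICANTS, per_band=1, seed=0)
print(SAMPLE)
```

Under the same assumption, a call with all 157 REF 2021 institutions, the 85 applicants and `per_band=10` would give a sample of 40, provided every band contained at least ten applicants.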

A table of participating institutions and the UoAs they prepared submissions for is provided in appendix A. In total 115 submissions were produced at unit-level (across the eight UoAs) and 40 institution-level submissions were produced. Opportunities for other institutions to be informed included sharing of the Pilot Guidance with the wider sector, a sector round table in February 2025 and online drop-in sessions in March 2025. A number of blogs were also published during the pilot to inform the wider sector of progress with the project:

- People, Culture and Environment in REF 2029: join the journey

- REF2029: People, Culture and Environment in Small Specialist Institutions

- Placing research integrity at the heart of REF

- People, Culture and Environment in REF 2029: the journey continues

Panel member selection

Submissions to each UoA in the pilot were reviewed by assessment panels. In total 165 panel members were recruited to conduct the assessment. In each UoA a unit-level panel comprised approximately 18 panel members and two co-chairs. An institution-level panel was composed of a chair, with membership comprising the co-chairs of the unit-level panels and some additional members bringing in specific expertise for the assessment of PCE.

To afford the widest possible opportunities for involvement, an open approach was taken to recruitment. Careful consideration was given to the expertise required for the panels. Panels needed to include not just academic expertise, but also specialists who are well-placed for the assessment of PCE.

In the application form prospective panel members were asked to briefly state what experience they would bring to the role, and specifically they were asked to outline their:

- experience of research assessment, for example, where they have served on a REF panel or other national assessment exercise

- experience of research submissions, for example, where they have coordinated an institution- or unit-level submission for the REF 2021 exercise

- general expertise in PCE, for example, where they have an institutional role focussing on a field related to research culture, or are involved in other groups or organisations with an active interest in PCE

The first stage of appointing the PCE pilot panels was selection of the co-chairs for each of the pilot UoAs. Applicants were asked to indicate if they wanted to be considered for a co-chair role when they applied. Members of the REF Steering Group, representing the four UK higher education funding bodies, and REF team reviewed the applications for the co-chair roles and considered the evidence presented against the above criteria. Individuals were not expected to demonstrate all of the criteria, but an even balance between the co-chairs of each panel was considered.

Once the co-chairs were appointed, a similar process was applied for co-chairs to select their panels. Co-chairs reviewed the applications for members of their panels and considered the evidence presented against each criterion; again individual panel members did not need to demonstrate all of the criteria, but consideration was given to the overall balance of the panel’s expertise. Co-chairs also considered the spread of subject expertise within the panel, such as representation of sub-disciplines within the UoA, and also the representation of institutional type within the assessment panels. A table of panel membership can be found on the REF website.

Submission phase

The participating institutions prepared submissions to the pilot incorporating evidence and narrative outlining their performance against a framework of five factors which enable positive research culture and ensure a well-supported research community. These five factors were developed following consultation with the sector in the PCE Indicators Project and were refined by the REF Steering Group. The five enabling factors are outlined below.

Enabling factors of positive research culture

- Strategy

Having robust, effective and meaningful plans to manage and enhance the vitality and sustainability of the research culture and environment

- Responsibility

Upholding the highest standards of research integrity and ethics, enabling transparency and accountability in all aspects of research

- Connectivity

Enabling inter-disciplinary and cross-disciplinary approaches both within and between institutions, fostering co-creation and engagement with research users and society, and recognising and supporting open research practices

- Inclusivity

Ensuring the research environment is accessible, inclusive, and collegial. Enabling equity for under-represented and minoritised groups

- Development

Recognising and valuing the breadth of activities, practices and roles involved in research, building and sustaining relevant and accessible career pathways for all staff and research students, providing effective support and people-centred line management and supervision, supporting porosity and embedding professional and career development at all levels and across all roles

A number of indicators were identified within each of these enablers, and sources of quantitative and qualitative evidence were suggested which could be used by HEIs to illustrate performance against each indicator. For the purposes of the pilot, HEIs were asked to return evidence and produce contextual elements supporting their performance across all indicators in the framework and to provide as much evidence as they were able to. In addition, if participating HEIs felt that their performance in PCE was better contextualised by other indicators then they were afforded the flexibility to include them. The intention was for the scope of the pilot to be as wide as possible, incorporating as broad a range of indicators as possible in order to assess which indicators and evidence sources were lowest burden to report and most amenable to the assessment of PCE. Feedback on the submissions and assessment from the pilot would provide the basis for developing a focussed and robust assessment of PCE for a full-scale REF exercise. Guidance for the pilot, including the framework and the assessment template, and suggested indicators is available on the REF website.

Participating institutions took a wide variety of approaches to their submissions and this was helpful in evaluating what was possible for the participating institutions to provide, and what was useful for the assessment panels.

Participating HEIs were given an introduction to the PCE Pilot Guidance before developing their submissions. Throughout the submission phase, January to March 2025, HEIs were supported by regular weekly check-in meetings with the REF team. These discussions were focussed on sharing early feedback on the development of PCE submissions, and on discussing and sharing approaches between the participating HEIs.

Assessment

Assessment of the submissions was conducted by the assessment panels. The responsibilities of the assessment panels were to:

- assess submissions made to the PCE pilot, including by reading and scoring submissions and reaching agreed outcomes in meetings with the unit-level and/or institution-level panel

- provide feedback on the assessment process by participating in workshop meetings to help evaluate the assessment processes trialled in the pilot

Panels met through a series of in-person and virtual meetings during this phase of the pilot from April to July 2025. They evaluated each of the submissions, assessing against the draft criteria outlined below. Criteria were expected to be refined during the pilot, and the draft definitions were provided only as a starting point.

Pilot assessment criteria

- Vitality

Vitality will be understood as the extent to which the institution fosters a thriving and inclusive research culture for all staff and research students. This includes the presence of a clearly articulated strategy for empowering individuals to succeed and engage in the highest quality research outcomes.

- Sustainability

Sustainability will be understood as the extent to which the research environment ensures the health, diversity, wellbeing and wider contribution of the unit and the discipline(s), including investment in people and in infrastructure, effective and responsible use of resources, and the ability to adapt to evolving needs and challenges.

- Rigour

Rigour will be understood as the extent to which the institution has robust, effective, and meaningful mechanisms and processes for supporting the highest quality research outcomes, and empowering all staff and research students. This includes the sharing of good practices and learning, embracing innovation, robust evaluation and honest reflection demonstrating a willingness to learn from experiences.

Final criteria for REF 2029 will be developed by the REF sub-panels. This will incorporate outcomes of the PCE pilot and also take into account other elements of the research environment (such as income, infrastructure and facilities).

Panels arrived at scores for each of the enabling factors in the assessment framework (Strategy, Responsibility, Connectivity, Inclusivity and Development). Scores were based on the working definitions of quality descriptors outlined below. These descriptors were intended to be refined during the pilot. Final descriptors will be developed by the REF sub-panels and will take into account other elements of the research environment (such as income, infrastructure and facilities).

- 4 star: provides robust evidence of a culture and environment conducive to producing research of world-leading quality and enabling outstanding engagement and impact, in terms of their Vitality, Sustainability, and Rigour. There is evidence that the policies and measures in place at the institution are having a positive impact on PCE within the institution, and furthermore collaboration and sharing of good practice and learning mean that there is also influence outside the institution.

- 3 star: provides robust evidence of a culture and environment conducive to producing research of internationally excellent quality and enabling very considerable engagement and impact, in terms of their Vitality, Sustainability, and Rigour. There is evidence that the policies and measures in place at the institution are having a positive impact on PCE within the institution.

- 2 star: provides robust evidence of a culture and environment conducive to producing research of internationally recognised quality and enabling considerable engagement and impact, in terms of their Vitality, Sustainability, and Rigour. There is evidence that the policies and measures in place to positively influence PCE at the institution are being adhered to.

- 1 star: provides robust evidence of a culture and environment conducive to producing research of nationally recognised quality and enabling recognised but modest engagement and impact, in terms of their Vitality, Sustainability, and Rigour. There is evidence that policies and measures are in place which are intended to have a positive impact on PCE at the institution.

- Unclassified/0: evidence provided is not robust, or evidence suggests a culture and environment conducive to producing research falling below nationally recognised standards.

Training was conducted in February 2025, with each of the panels discussing the pilot framework and their own working methods for assessment. Training on EDI considerations, such as unconscious bias, was also provided by the REF People and Diversity Advisory Panel (PDAP).

Across the pilot, panels adopted structured and collaborative approaches to the assessment to ensure consistency and fairness. The first and last meetings were in-person, with the meetings in-between being held online. At each meeting a range of submissions was assessed and scored, and panels reflected on the assessment process. At the final meetings scores and feedback were finalised.

Submissions were typically reviewed in smaller subgroups to manage workload within the timeframe. Scores were submitted prior to each meeting, and consensus discussions were used to moderate scoring. Panels typically held preliminary discussions at their first meeting to discuss scoring and to develop an understanding of the quality descriptors and assessment criteria. Continuing discussions in subsequent meetings helped align scoring and reduce variability. While institutional statements were often read by the unit-level panels for context, emphasis was placed on evaluating embedded evidence within the narrative rather than relying on corroborating sources.

4. Results and analysis

- Participating HEIs and assessment panels gave feedback on the approach tested in the pilot. This feedback allowed the development of conclusions about the assessment of PCE, though development of the piloted approach would be necessary before it could be applied in the REF 2029 assessment.

- It is important to strike a balance between institution-level and unit-level assessment. Unit- and institution-level statements should be integrated. Institution-level statements should clearly articulate the policies and the strategic need for them; unit-level statements should then explain how these policies are delivered and the impacts of those policies.

- Operating context is critical to the assessment of PCE. PCE assessment in the REF should be supportive of the wide range of approaches, strategies and missions in the sector. Not all HEIs are operating with the same resources and they are at different stages in their ‘PCE journeys’; this needs to be recognised and accounted for in the assessment.

Framework

- The framework of enabling factors was largely fit for purpose, but could be simplified by combining some of the indicators.

- Consideration should be given to how the PCE assessment is incorporated into the Environment assessment from previous REF exercises.

- The framework tested could be simplified; for example, Responsibility and Connectivity, and/or Inclusivity and Development, could be combined.

Assessment Criteria

- The assessment criteria of Vitality, Rigour and Sustainability were considered as starting points in the pilot assessment; the criteria were expected to be developed as the assessment progressed.

- The criterion of Rigour was not felt to be useful to the assessment and it was suggested that this could be left out, or revised to capture Reflection on the impact of policies on research culture.

Quality Descriptors

- The quality descriptors for assessment should seek to capture the quality of research environment and culture, and the support for people, which provides the foundation for the generation of high-quality research output and impact.

- The most helpful approach was that the descriptors should refer to evidence that a policy was in place, evidence that the policy was being followed, evidence that the policy was having an impact, and evidence that the policy was influencing the sector.

- Influence on the broader sector was problematic for some types of institution and this should not be a requirement for 4* quality.

Indicators

- For the pilot, examples of quantitative and qualitative evidence and contextual information were suggested, but HEIs were encouraged to provide any evidence they felt appropriate to represent their performance in PCE.

- Indicators were largely assigned to the correct enabler, though there were overlaps.

- Assessment panels managed the assessment with the evidence available, and were able to reach robust judgements on the quality of the submissions.

- There was some feeling that clear guidance on where an indicator was being used was necessary, though also a feeling that flexibility would be important to support the diversity of HEIs, institutional strategies and missions within the sector.

- The aim of the pilot was to test a wide range of potential indicators of positive research culture with the intention of focussing on a tighter set for the full-scale assessment.

- Careful consideration was given to the rationalisation of the list of indicators and evidence. Across the panels there were often differing opinions about indicators. A list of indicators is provided where there was reasonable agreement that they may be useful for assessment in REF 2029. Indicators which are not suggested for consideration in the assessment framework for REF 2029 are still deemed important; they should not be discounted, and due consideration should be given to how they may be developed for inclusion in future assessments.

Submissions Template

- The template and guidance in the pilot were deliberately open to allow flexibility in the approaches taken; however, the template would benefit from more structure and focussed questions.

- The word limits tested in the pilot (of 1,000 words per enabler) were generally felt to be too restrictive, especially for institution-level statements. Both increased word limits and a focussed list of indicators would be required for indicators to be covered in sufficient depth. The most frequently suggested word limit was 1,500 to 2,000 words.

Results of the Assessment

- Assessment of the submissions was conducted by the assessment panels which evaluated each of the submissions assessing against the draft criteria. Panels arrived at scores for each of the enabling factors in the assessment framework (Strategy, Responsibility, Connectivity, Inclusivity and Development).

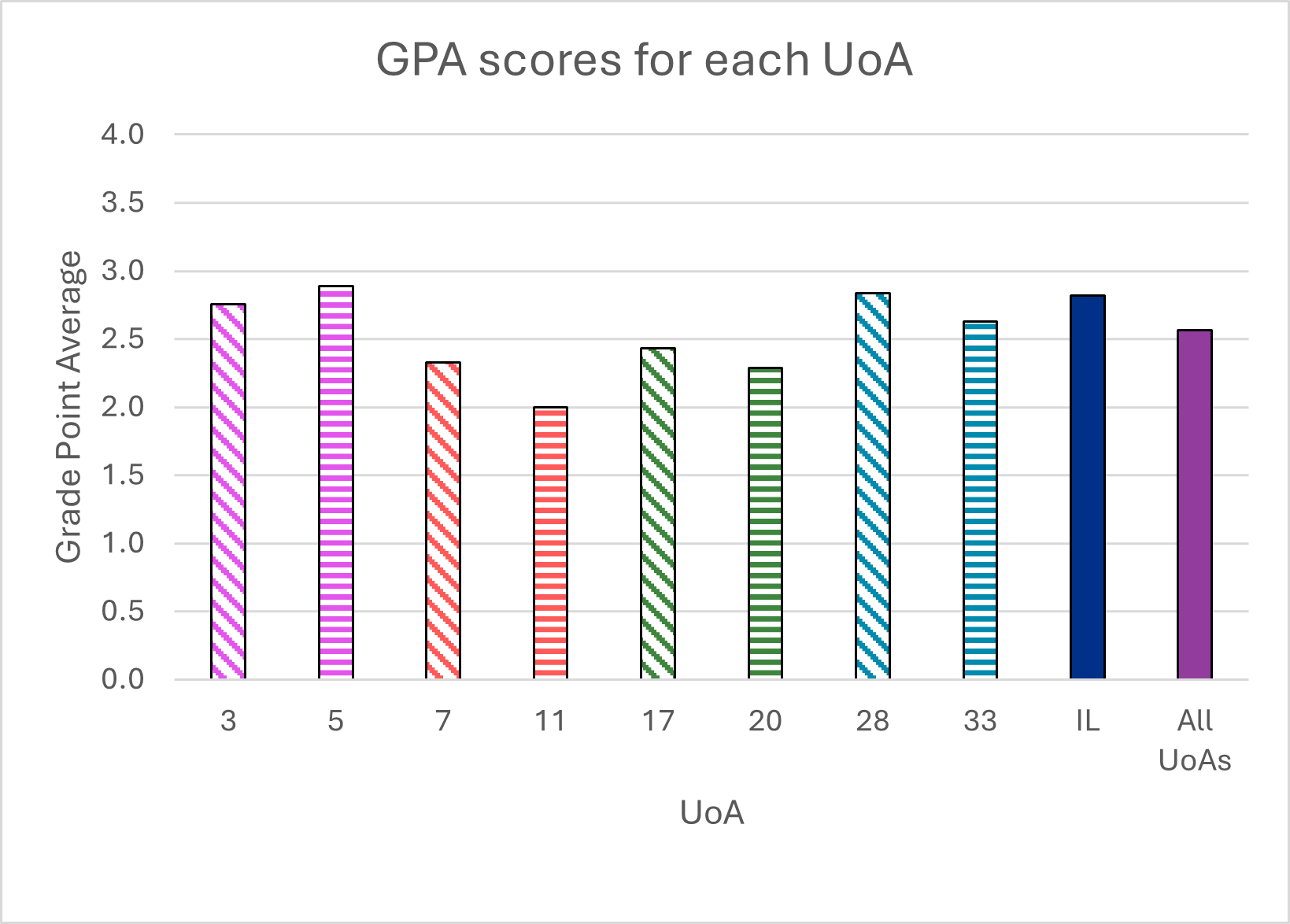

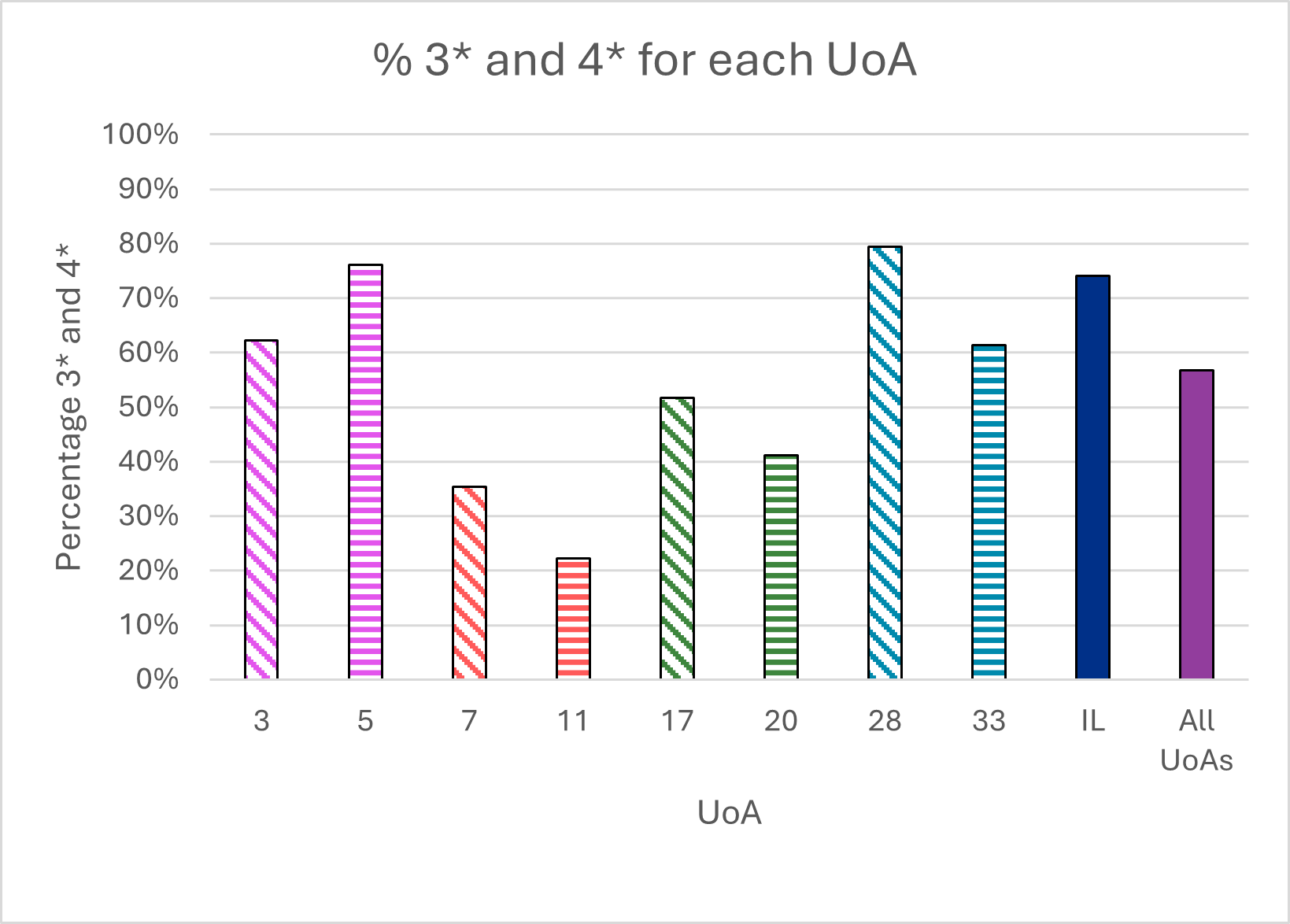

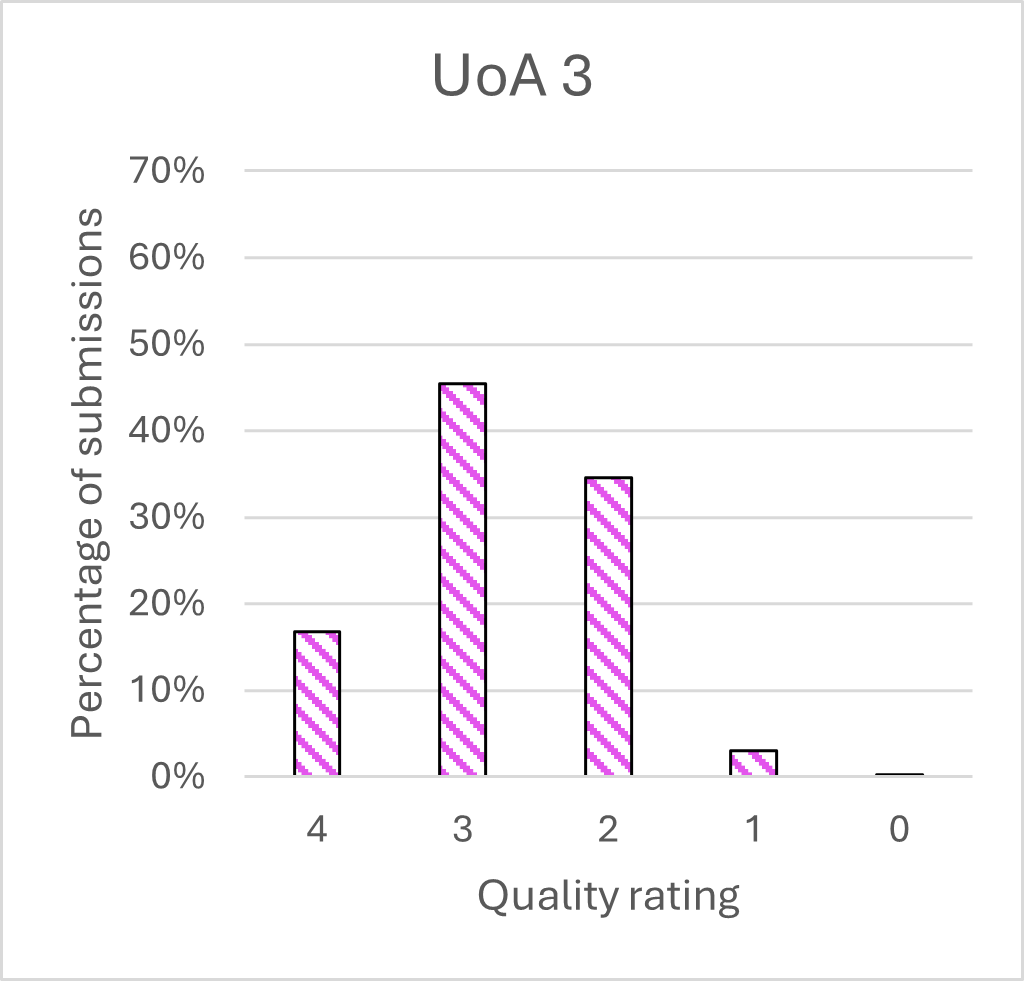

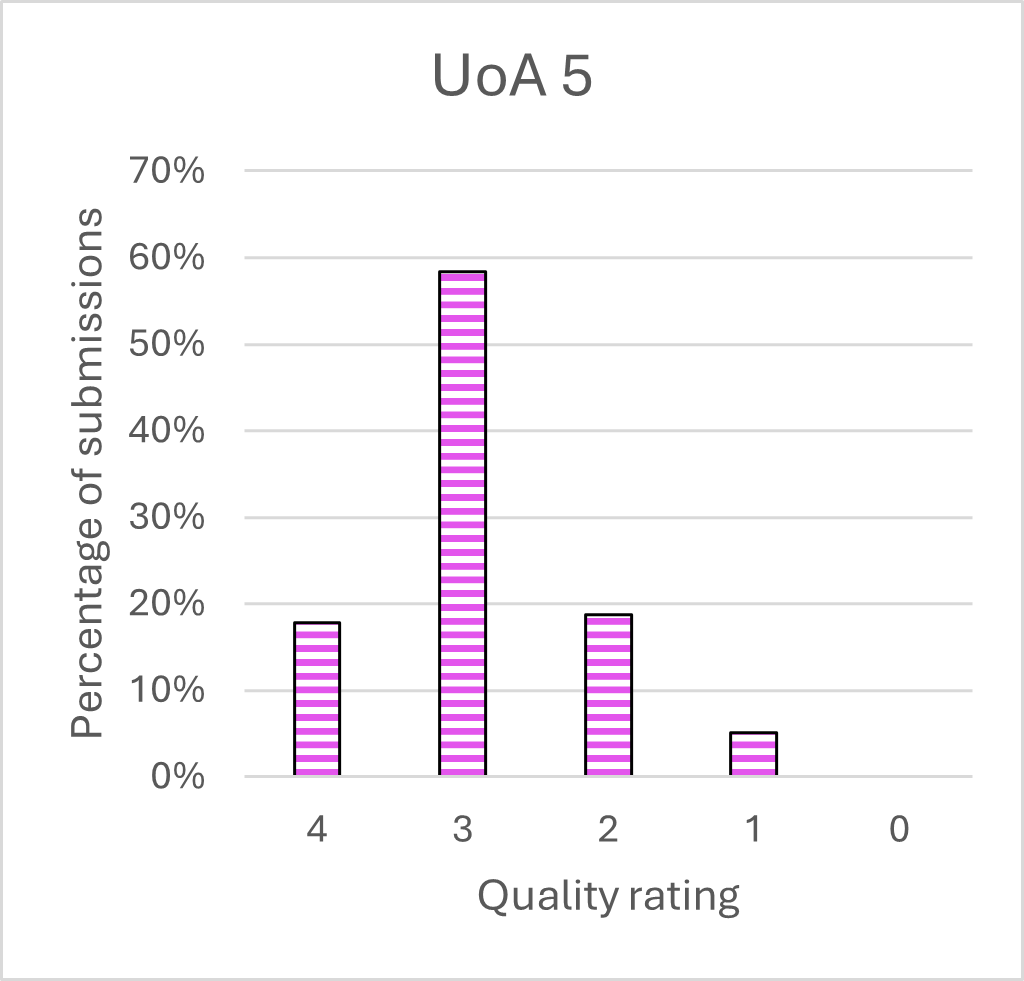

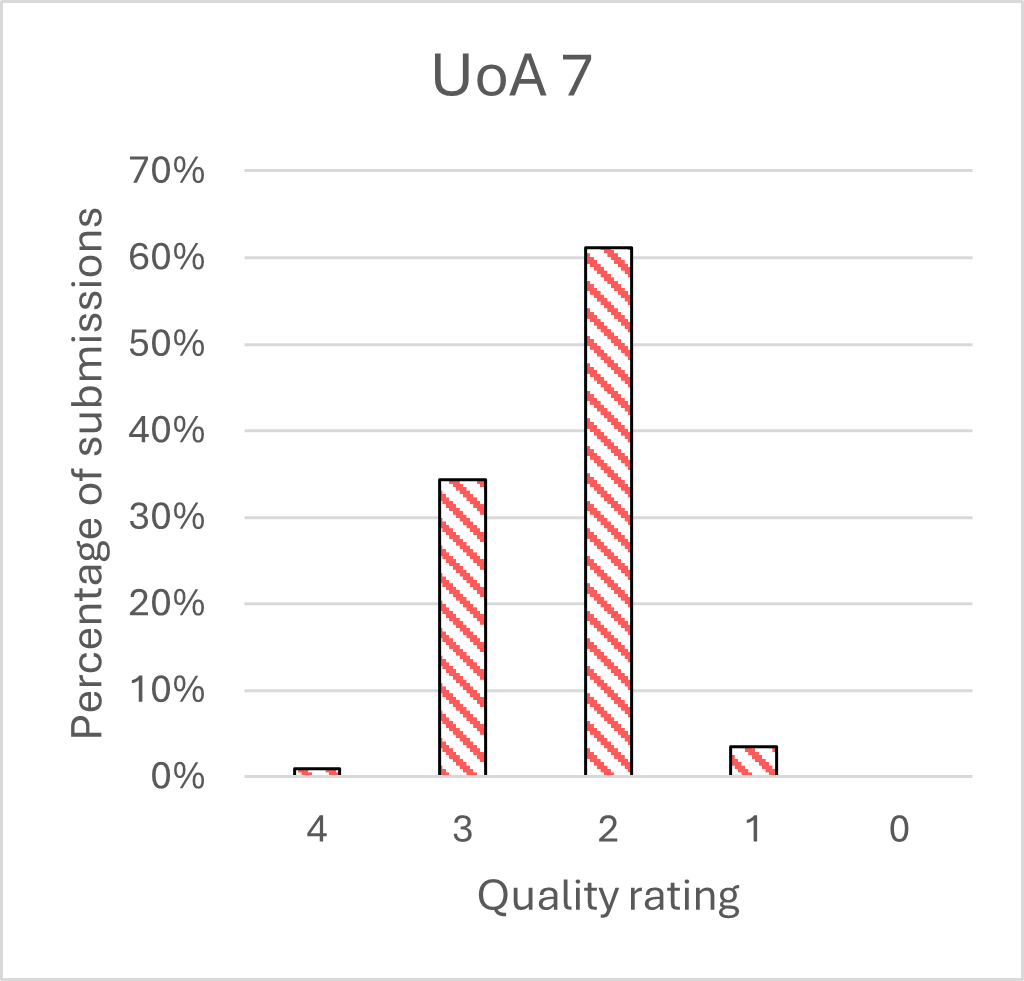

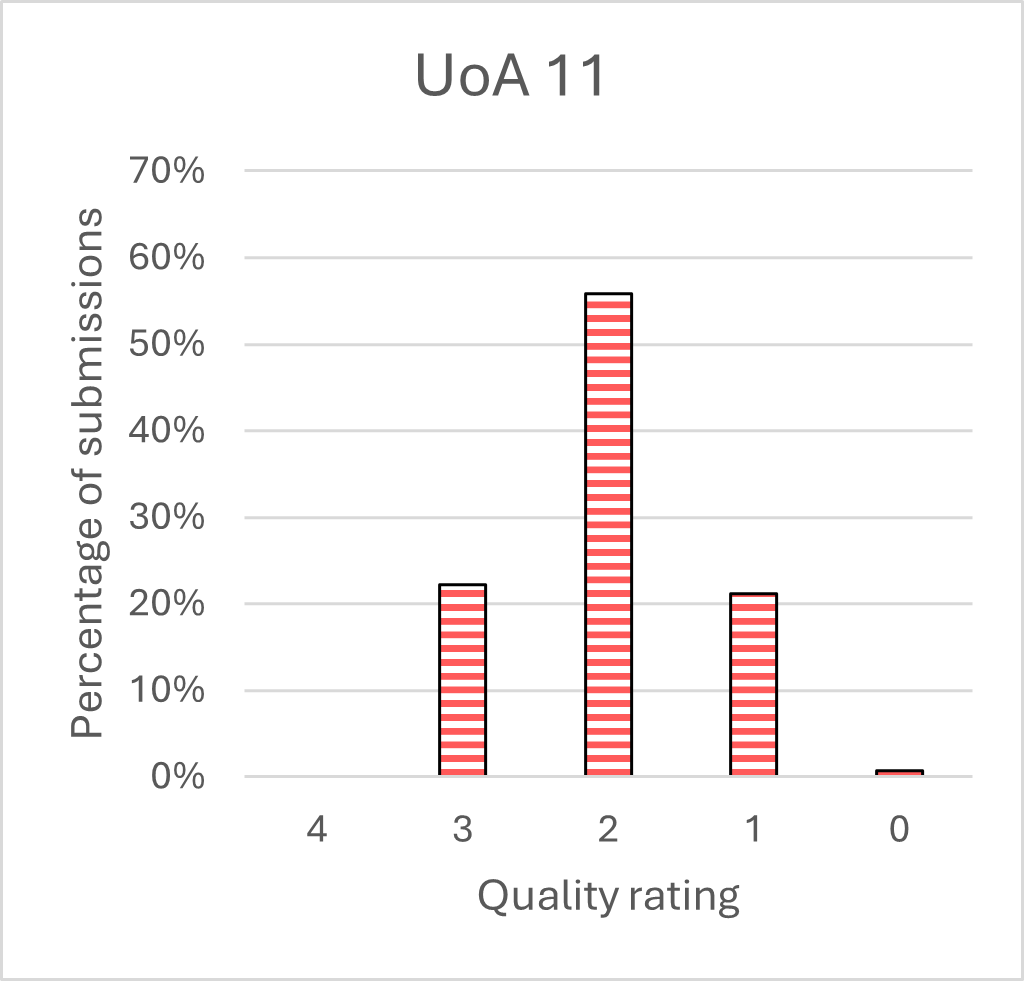

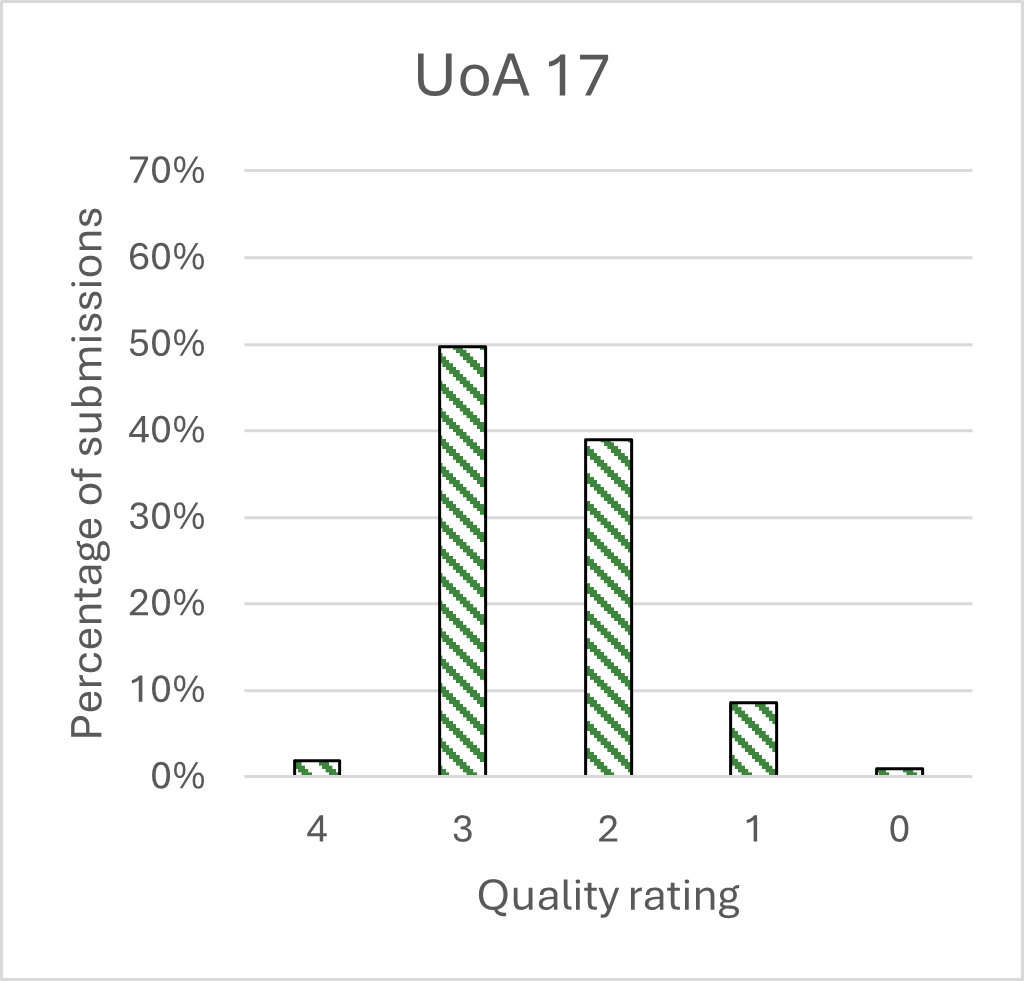

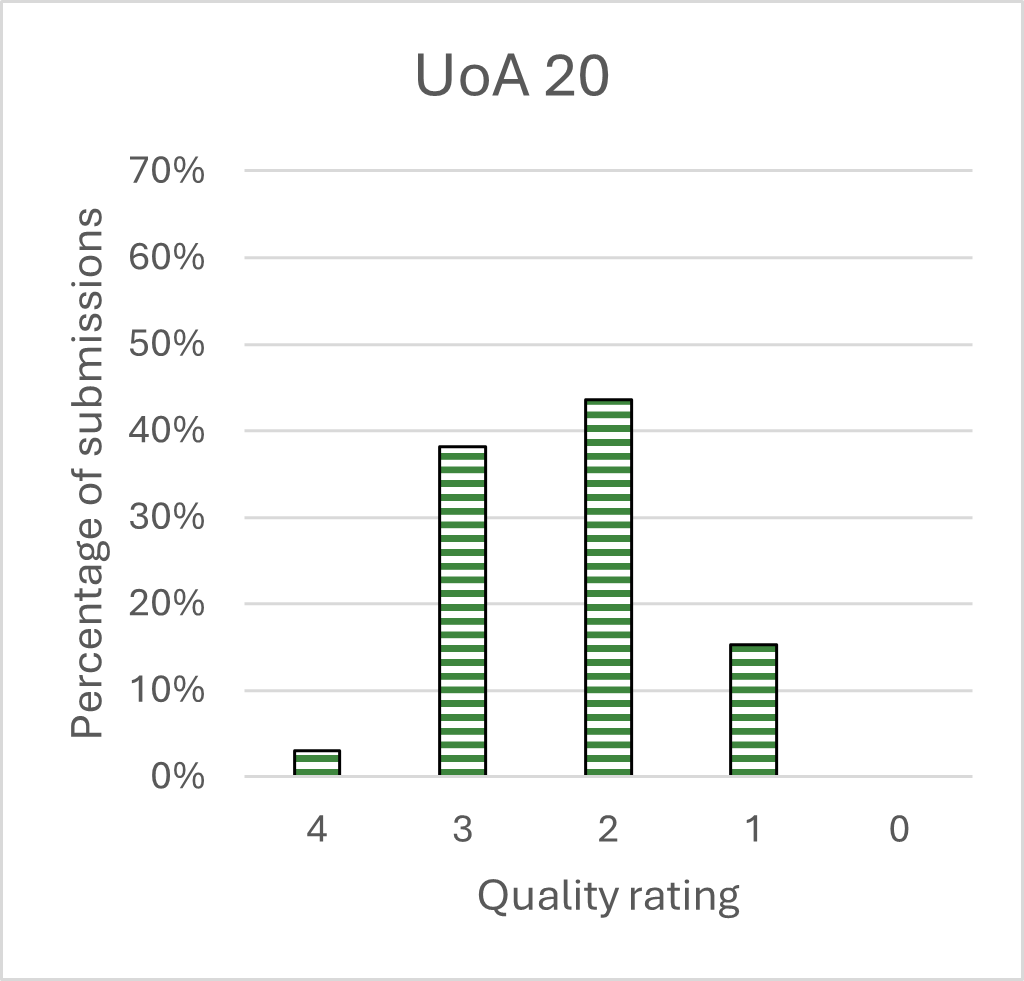

- No formal calibration was conducted within the assessment panels and HEIs took a wide variety of approaches in their submissions. Therefore, direct comparisons of one institution to another, or of one UoA to another would not be robust. The analysis provides summary statistics to give overall indications of performance without making direct comparisons.

- The assessment of PCE in REF 2029 will be different from the assessment conducted in the pilot. The Framework, Assessment Criteria, and Quality Descriptors are all expected to be refined for the full-scale exercise. Therefore, results of the pilot should not be interpreted as an indication of expected performance in REF 2029.

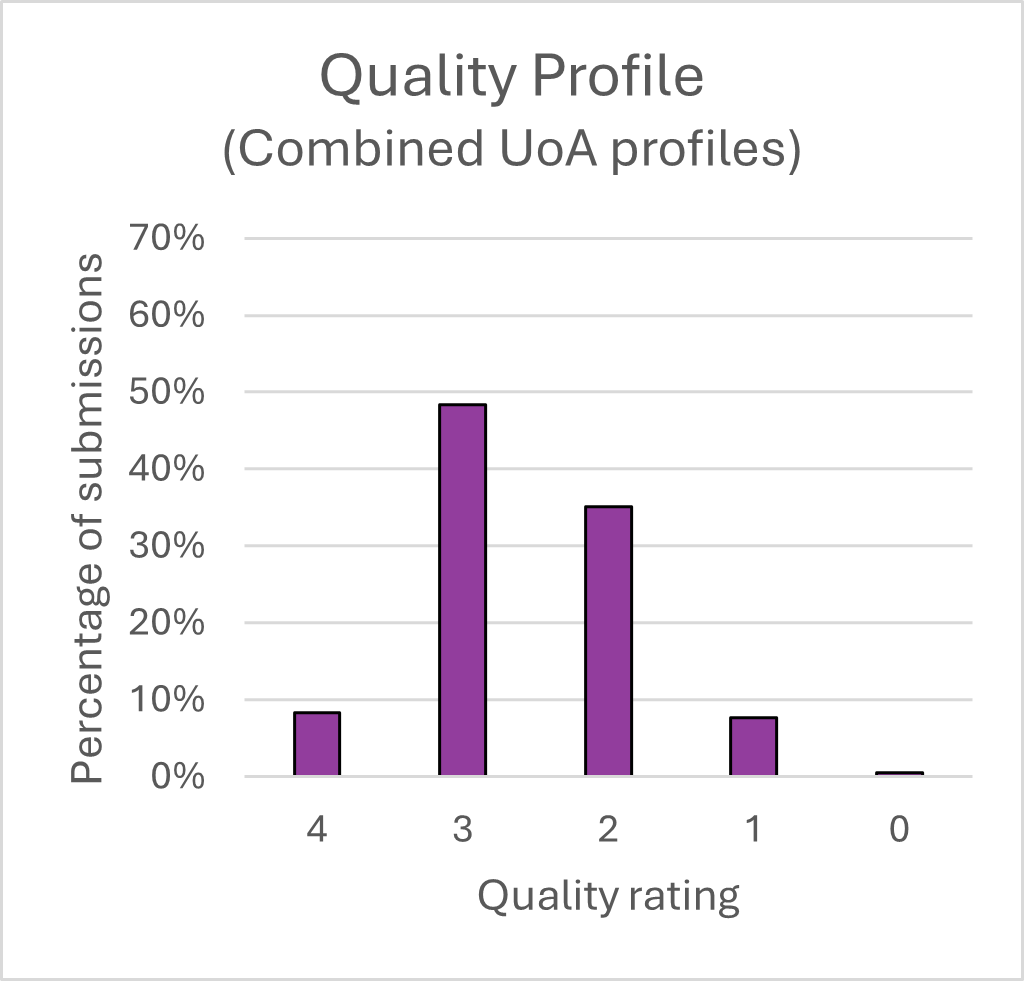

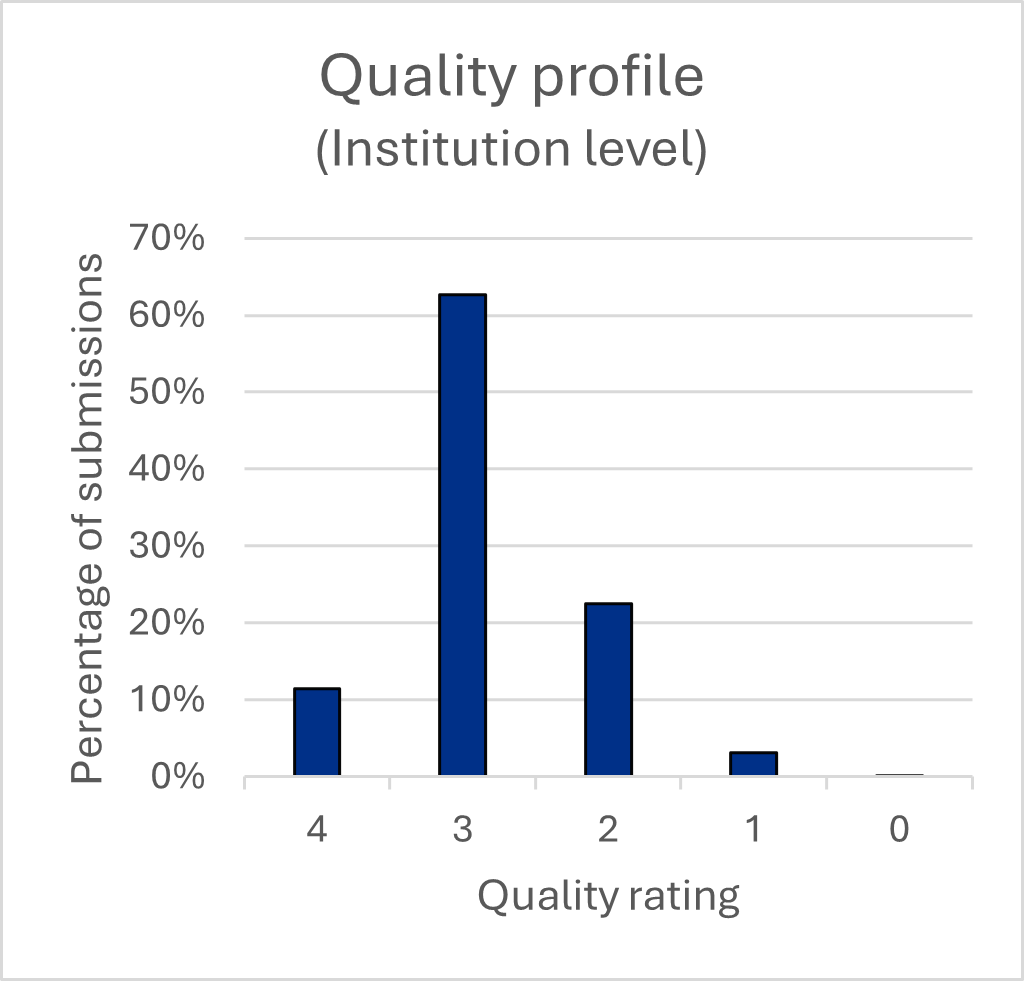

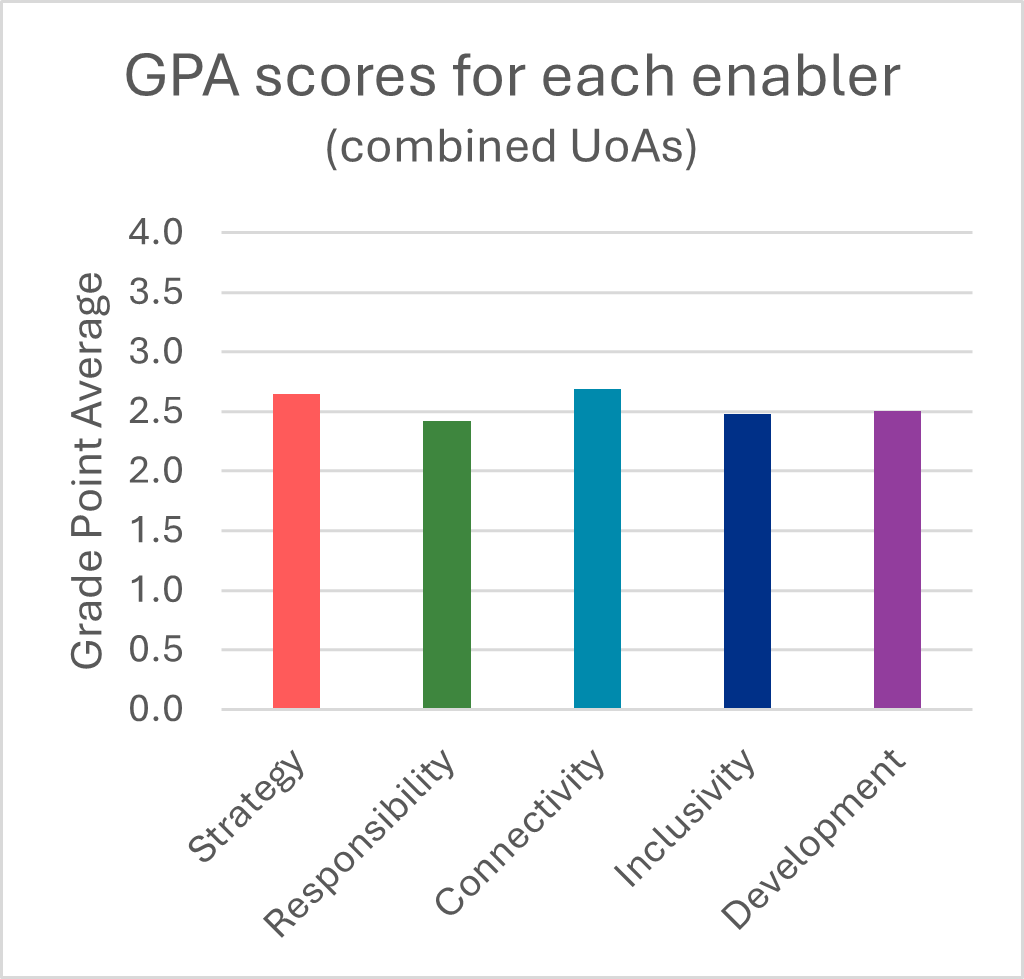

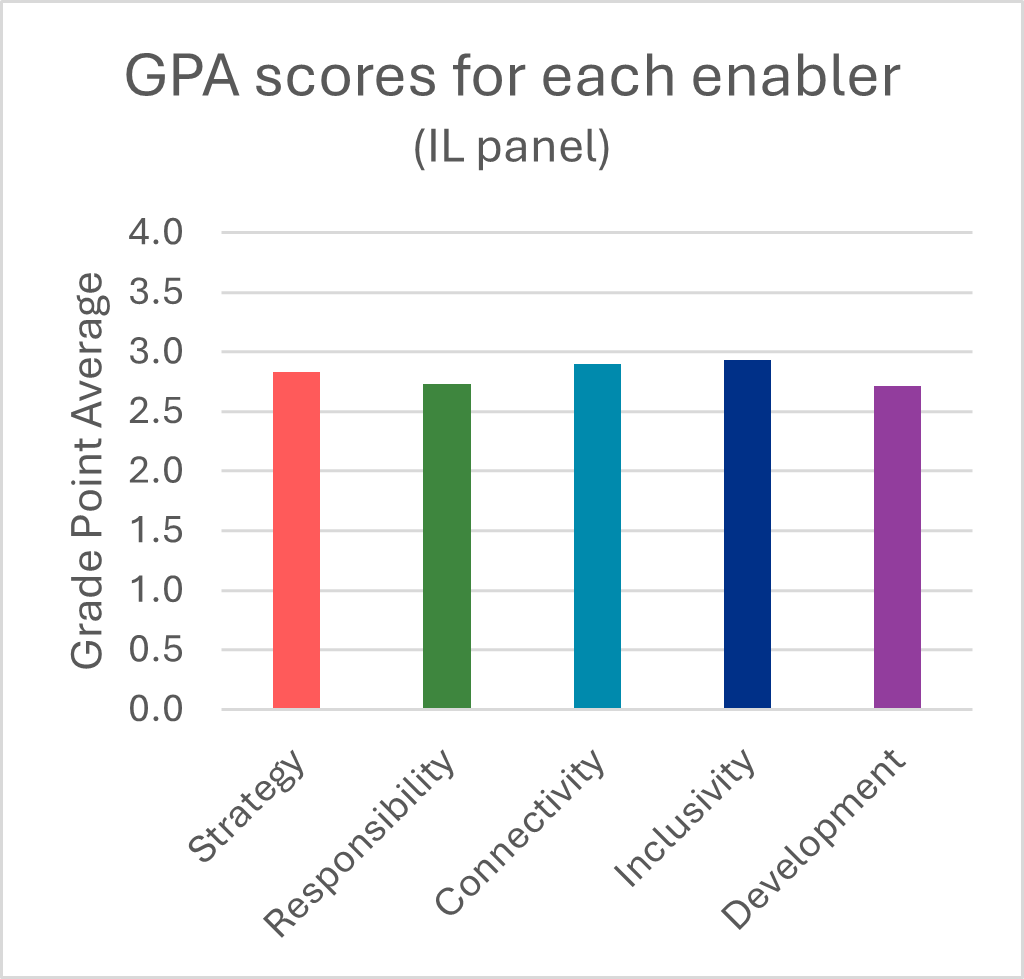

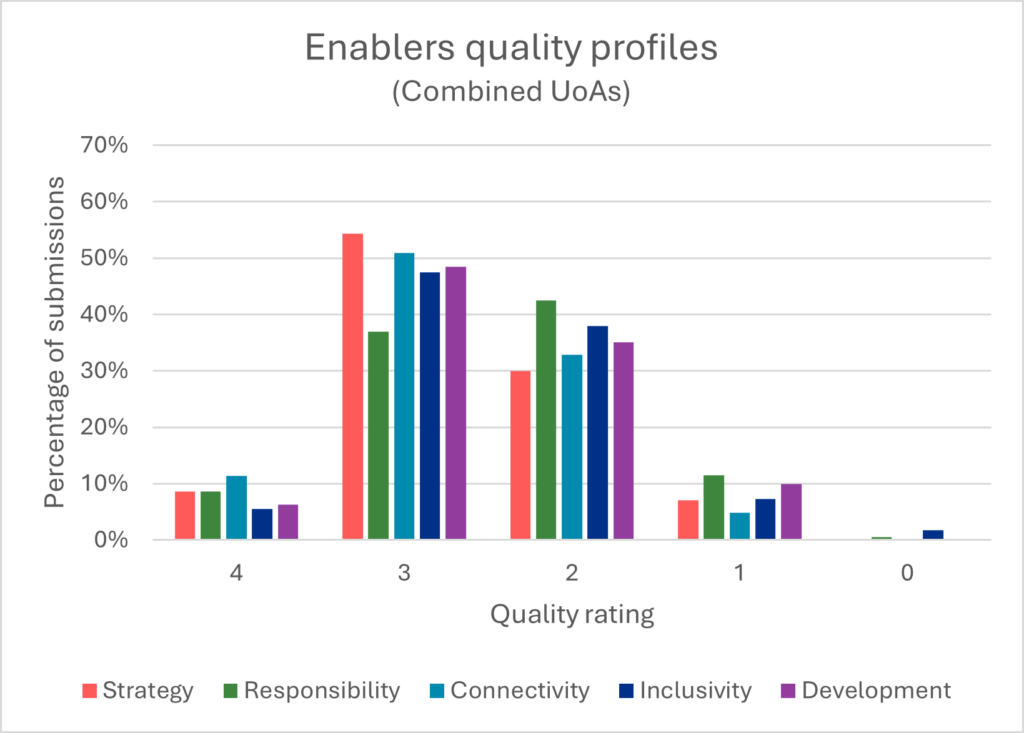

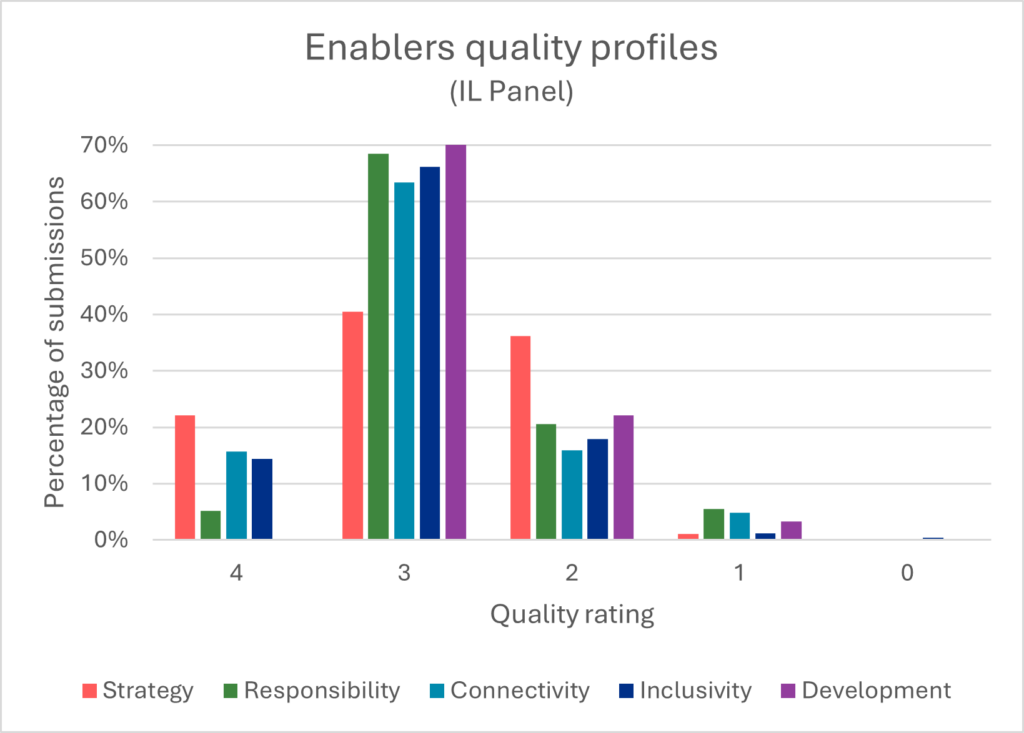

- Overall, scores across the pilot were lower than those for Environment assessment in REF 2021; this reflects the exploratory nature of the submissions and assessment processes being evaluated in the pilot.

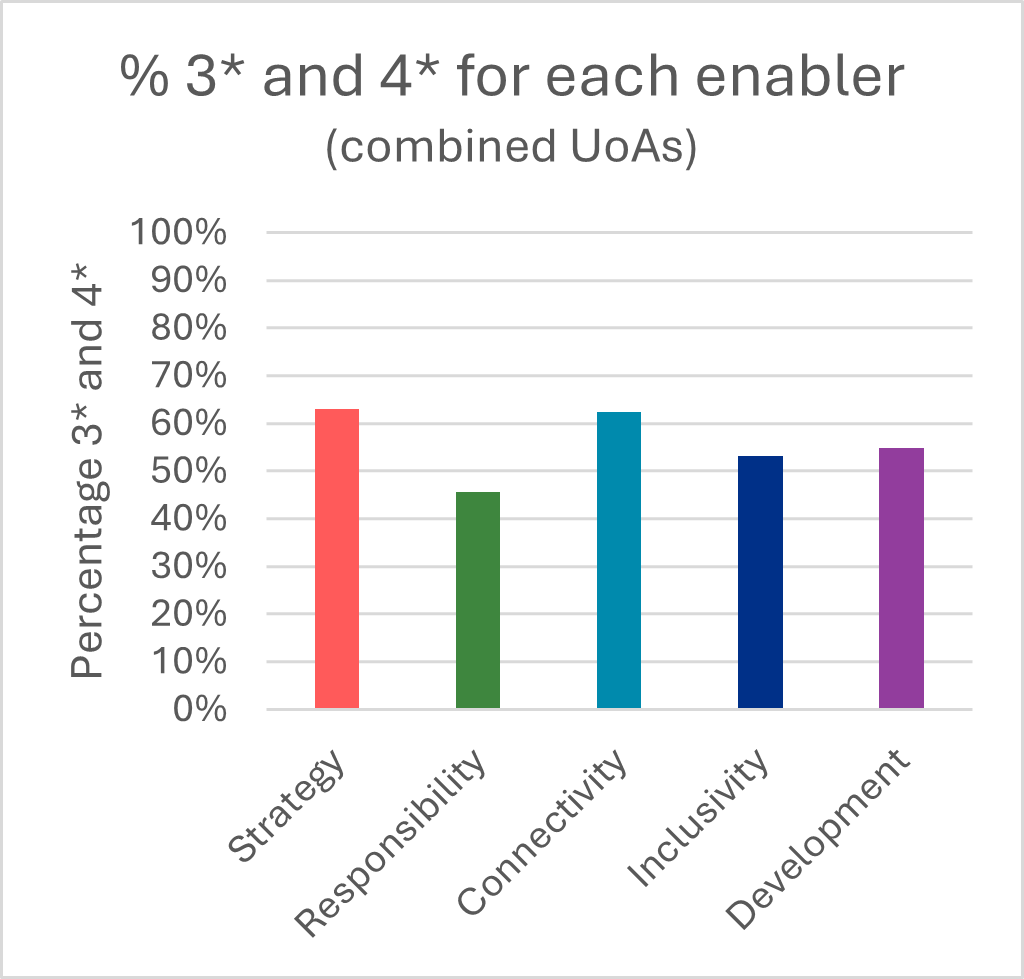

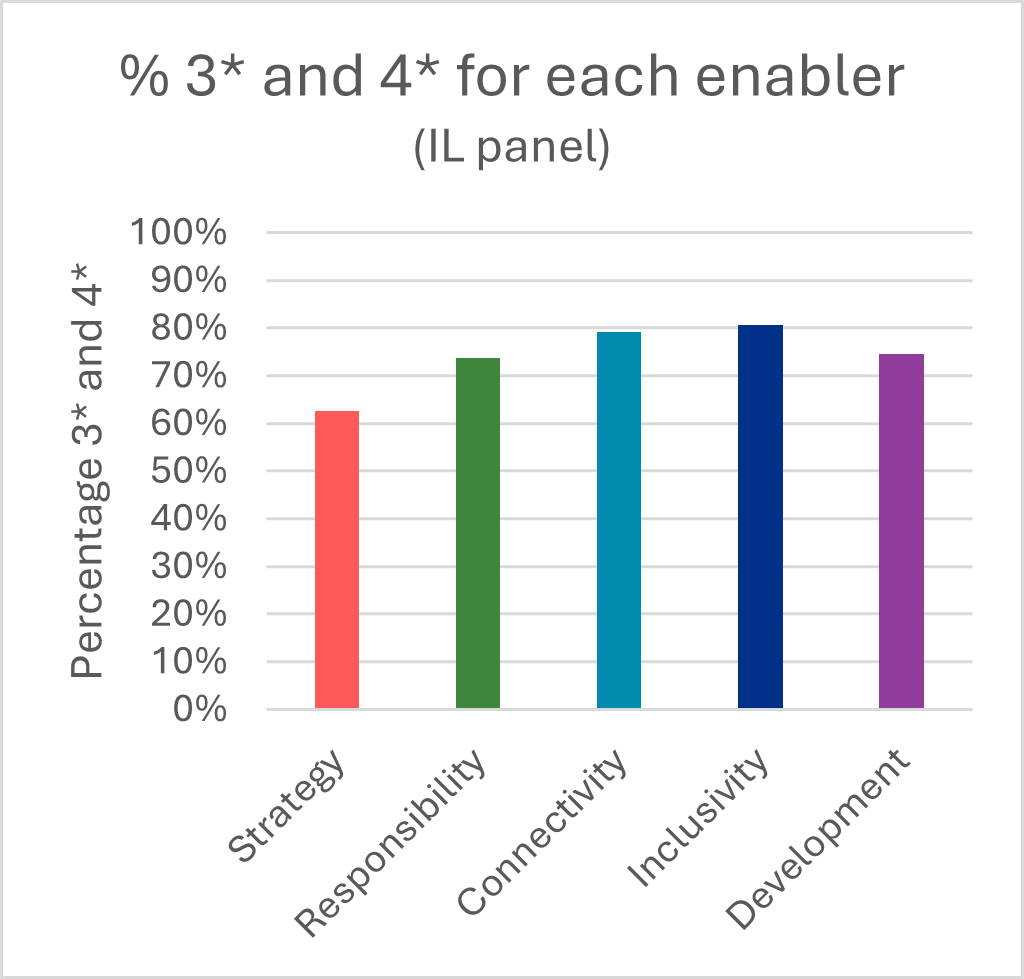

- There appears to be reasonable consistency between the different enablers with no particular enabler scoring significantly higher or lower than the others.

- There is some variation between the profiles for the different UoAs. Variation in scoring could be due to different approaches to the assessment between the assessment panels so should not be considered as differences in the quality of PCE approaches between different subject areas. Institution-level assessment was generally above the mean for the pilot, though this is likely due to better availability of evidence and data at institution-level.

- There is a clear trend, with larger institutions scoring higher on average than smaller institutions. This trend is apparent despite the panels’ efforts to be as inclusive as possible in their approach to the assessment. Evidence was often lacking for smaller institutions and the panels were less confident in their scores; however, the difference could be attributed to different approaches taken by the participating HEIs and should not be interpreted as differences in the quality of the PCE operating at smaller institutions.

Participating HEIs gave feedback on their experience of preparing submissions through a set of structured questions, though they were given scope to provide additional feedback which did not fit the direct questions. Assessment panels also provided their feedback reporting directly to the REF team giving their reflections on the assessment processes after each round of meetings, and producing informal feedback reports at the conclusion of the exercise. In general, the feedback from the assessment panels was in close alignment with the feedback from the participating institutions. Feedback is presented below from both of these key stakeholders in the pilot.

Framework

The majority of participating institutions and the assessment panels felt that the piloted enablers were broadly clear and appropriate, though there were overlaps and there is potential to combine them, and that a simplification of the framework would be welcome. There was some discussion around whether a single statement with some flexibility to cover the different enablers might be simplest, but the balance of opinion suggested that a more structured template would be easier to complete (and may lend itself to a more efficient assessment). In addition, participating institutions suggested that clearer descriptions of the enablers are needed. These were very broad in the pilot, and it was not always clear what the ‘exam question’ was.

More broadly, careful consideration will need to be given to how the PCE elements and the Environment elements from previous REF exercises are integrated together. The Environment elements (such as income, infrastructure and facilities) were not explicitly assessed in the pilot, but it is anticipated that they will remain an important part of the assessment.

Feedback on each of the enabling factors is summarised below.

Feedback on enabling factors

- Context

Many institutions chose to include environment data as in REF 2021 (such as income and infrastructure data) for context, recognising that Environment was not to be explicitly assessed in the pilot. There was a clear understanding that this would be expected to be part of the assessment in REF 2029. However, it was felt that ‘Environment assessment’ should be broader than what was done in REF 2021 and should incorporate more than just income and infrastructure data. PCE should assess what is being done with the resources available; it should be about delivering the best possible outcomes with the resources available, not about rewarding institutions for attracting income; having more resources should not score highly if those resources are not being put to good use.

- Strategy

Strategy was seen to hold a distinct role in framing institutional direction. Many institutions don’t have an explicit research culture strategy. With PCE still at the pilot stage within the REF it is unreasonable to expect institutions to have well-developed PCE strategies, but institutions should be demonstrating a strategic approach to developing or maintaining a positive research culture. There should not be a requirement to produce PCE strategies (or worse, REF PCE strategies) where this is not appropriate for the institution. Submissions need only articulate the strategic research intent (as part of the research strategy, for context) and then the specific objectives for activities encompassed under PCE. In many cases this could mean highlighting parts of the research strategy relevant to the PCE assessment. Often strategy was seen as operating only at institution-level, due to its overarching nature and alignment with institutional governance. However, there was also a need to reflect on localised strategic priorities at unit-level. In some cases (dependent on the type of institution), there would be a unit-level strategy which could be distinct from the institution-level strategy. There may be bottom-up as well as top-down strategies and these need to be captured. However, the approach should be appropriate to the operating context of the institution; there should not be an expectation of a set of unit-level strategies generated just for REF.

- Responsibility

Responsibility was felt to be mostly about compliance, and often only applicable at institution-level (for example, carbon emissions data). There was also a feeling that the indicators did not entirely address transparency and accountability, and specific feedback from institutions making submissions to arts and humanities was that traditional research integrity frameworks did not apply in all disciplines.

- Connectivity

The Connectivity enabler covers a lot of indicators. Frequently it was described as too broad, with overlap with Engagement and Impact. There was a strong desire to refocus or split the enabler into clearer components. For example, there were suggestions that open research should be part of the Responsibility enabler. Clearer definitions of inter- and cross-disciplinary research are needed for this enabler. Collaboration was an important part of this enabler; however, some small and specialist institutions felt that demonstrating this at scale would be challenging.

- Inclusivity

Inclusivity was acknowledged as important, and it was key to include diversity of both people and research. However, Inclusivity was difficult to evidence at UoA level due to data suppression and small sample sizes. This was especially cited as an issue by small and specialist institutions where EDI concerns were particularly prevalent. Clear definitions of research enabling staff are needed here. Because of the issues with data, often Inclusivity was evidenced by compliance with charters or concordats, which the panels found to be of limited use.

- Development

Development was another broad enabler, though this breadth was seen as necessary; for example, there was support for the inclusion of wellbeing, career porosity, and support mechanisms within this enabler. The focus of this enabler should be on people and therefore (as with other enablers) clear definitions of the people relevant to the assessment of PCE should be provided; for example, the term ‘research community’ was used to encompass the broader contributions to research, including research enabling staff.

Unit-level and institution-level assessment

There is a need to strike a balance between unit-level and institution-level assessment, and some enablers may lend themselves more easily to institution-level or unit-level assessment. Key here is being able to support sector diversity; there is a wide range of approaches, strategies and missions in the sector, and the assessment needs to be designed such that all of these approaches can be equitably assessed.

Unit-level and institution-level assessment are both necessary as a way of disentangling the differences between support at the different levels. If there are differences in performance between units within an institution, or if the performance of the unit is out-of-step with that of the institution, then this approach allows us to surface these issues and understand the reasons for the differential performance.

There is a need to integrate the assessment at unit- and institution-level. Statements at each level should be addressing different things and the guidance needs to be clear on this. At institution-level the statement should clearly articulate the policies and the strategic need for them; at unit-level, the statement should then explain how these policies are delivered and the impacts of those policies. However, there needs to be space to accommodate bottom-up strategies (meaning those developed within departments) as well as top-down. Not all policies are dictated to the unit by the institution.

Context and journey travelled

Assessment panels were clear that context was critical to the assessment of PCE. It is important to recognise that not all HEIs are operating with the same resources and that they are at different stages in their ‘PCE journeys’; this needs to be accounted for in the assessment. The highest scores should be achievable by any HEI working efficiently in focussed areas which have been targeted for strategic reasons. Panels felt that the distance travelled from their starting point (where institutions have shown an improvement) should be rewarded. However, journey travelled should not imply a need for constant growth and/or constant improvement of research culture when, in an environment of constrained resource, maintaining performance could be considered a success (such as doing the same with less, or maintaining an already excellent performance). Nevertheless, there should be some reflection; if we expect institutions and units to be sustainable and resilient then they will need to reflect and adapt their approaches.

Participating HEIs agreed that context needs to be more clearly articulated and should be utilised in the scored elements of the assessment (in the pilot this section often incorporated some of the Environment data as used in previous REF exercises). There is tension here because HEIs are outlining their operating context and are being asked to reflect on any learning gathered and distance travelled; there was concern that this could introduce biases into the assessment process with the danger that bigger would be interpreted as better.

Simplification

There were various suggestions from the panels on how the enablers might be combined, or the framework might be simplified. One potential idea was that Responsibility and Connectivity could be related. There was debate about whether open research belongs in Connectivity, because it is about making connections and working with others, or in Responsibility, because Open Access is a responsible way to make research outputs available. Combining these enablers would side-step this debate. More generally working in a connected way could be seen as a responsible approach to research. Combining these two could remove the need to decide where exactly evidence should be submitted and could streamline the framework. Stronger references to environmental impact and social justice were also included here.

Similarly, Inclusivity and Development had some overlap because they incorporated the inclusion of all staff, and the supporting and developing of staff is part of ensuring they are included and engaged in the research culture. Again, combining these two enablers could streamline the framework.

Strategy remains an overarching theme, though consideration should be given to integration into the other enablers. One key aspect which could be strengthened was the necessary reflection that HEIs should be undertaking to refine and develop their ongoing strategy. The Context section was felt to be pertinent to the Strategy; HEIs are working with different resources and operating contexts, and this is important when considering strategy. Strategy should be seen as how an HEI is responding to the context in which it is operating.

Many of the participating HEIs provided the Environment elements from previous REF exercises in the Context section (for example, data and narrative on research doctoral degrees awarded, research income, and income in kind). The panels found this approach helpful, and suggested that these elements should be incorporated into the assessment of PCE.

These three combined enablers could be described as Environment, Culture and People as outlined below.

Possible combination of enabling factors

- Environment – Strategy in Context

Having robust, effective and meaningful plans to manage and enhance the vitality of the research culture and environment, with due account of the available resources and operating context. Mechanisms for reflection are in place to ensure the sustainability of the research culture.

- Culture – Responsibility and Connectivity

Upholding the highest standards of research integrity and ethics, enabling transparency and accountability in all aspects of research. Working with a view to impacts on the environment and on social justice. Enabling collaboration at all levels through open research and engagement of research users and society, and through inter-disciplinary and cross-disciplinary approaches both within and between institutions.

- People – Inclusivity and Development

Ensuring the research environment is accessible, inclusive, and collegial. Enabling equity for under-represented and under-recognised groups. Recognising and valuing the breadth of activities, practices and roles involved in research, building and sustaining relevant and accessible career pathways for all staff and research students, providing effective support and people-centred line management and supervision, supporting porosity and embedding professional and career development at all levels and across all roles.

However, there was some feeling that there were advantages to keeping the more granular framework; research culture was seen as being broader than just Responsibility and Connectivity and similarly Development and Inclusivity did not entirely capture the important elements to be addressed in supporting the people enabling and delivering research. A more granular framework would also allow a more nuanced approach to the assessment.

In addition, the panels agreed that consideration should be given to how PCE aligned with other elements of the REF exercise (CKU and E&I). For example, some aspects of Connectivity could find their home in E&I. However, there is a counterargument that PCE could draw in some of the aspects of CKU and E&I to produce a more streamlined framework overall.

There was a general need for clarity of definitions and some suggested definitions were:

- People

Staff who undertake research, including PGRs, and those in research-enabling roles, and how they are recruited, developed and offered career development support and opportunities, and how these are taken up and shape the environment. ‘Research-enabling staff’ refers to those in Professional Services roles, such as (but not exclusively) Technicians, Project Managers, Researcher Developer leads, those supporting bid development and colleagues in Libraries and IT Services who facilitate research activities.

- Research culture

Behaviours, values, expectations, attitudes of our research communities, the strategies that support them and the means by which they are realised.

- Environment

The intellectual and organisational environment within which research is conducted, and the income, infrastructure and facilities associated with this.

Assessment Criteria

The assessment criteria of Vitality, Rigour and Sustainability were considered as starting points in the pilot assessment; the criteria were expected to be developed as the assessment progressed.

Participating HEIs and assessment panels agreed that Assessment Criteria should take account of operating context and journey travelled. It is important to recognise that not all institutions are operating with the same resources, or working to the same institutional mission or strategy, and PCE should recognise where institutions are doing a good job with available resources, or working on delivering a positive research culture in focussed areas of their portfolio. The process of reflection and further planning is important, and this could be better reflected in the assessment criteria and in the quality descriptors, though not necessarily through the introduction of the additional criterion of Rigour. Generally, the participating HEIs and assessment panels recommended that the assessment should retain the criteria of Vitality and Sustainability. Feedback on the assessment criteria is summarised below.

Feedback on assessment criteria

- Vitality

Vitality was one of the criteria used for assessment of environment in REF 2021. It was generally felt to apply well to the assessment of PCE, and was well understood by the participating HEIs and assessment panels. However, Vitality requires clarity in language, for example ‘thriving’ could be interpreted as vague and subjective. In addition, Post-92 and small and specialist institutions emphasised the need to reflect localised progress rather than sector-wide influence.

- Sustainability

Sustainability was one of the criteria used for assessment of environment in REF 2021. It was generally felt to apply well to the assessment of PCE, and was well understood by the participating HEIs and assessment panels. However, concerns were raised about articulation of Sustainability in the current climate. Financial sustainability could be working well with less resource, for example ensuring that the research environment remains resilient in a contracting sector. This criterion was seen as important but there were concerns around whether it includes future planning, resilience and adaptability. Generally, sustainability was seen to be demonstrated at a strategic or institution-level. Smaller institutions and Post-92 institutions noted that sustainability is often tied to institutional investment, while research-intensive institutions focussed on the need to align sustainability with strategic planning and infrastructure.

- Rigour

Rigour was introduced to understand how HEIs were reflecting on their practice and striving for continuous improvement. Rigour was seen as problematic because of the difficulty in measuring the robustness of the processes in place; this could be difficult for smaller data sets, and therefore for smaller institutions/units. Rigour was frequently described as ambiguous and overlapping with other criteria, making it confusing. HEIs asked for clearer definitions, for example by deleting or refining ‘meaningful’ in the phrase ‘meaningful mechanisms and processes’. Smaller institutions were concerned about being penalised for not having formalised processes. HEIs making submissions to arts and humanities UoAs questioned how rigour applies outside of traditional scientific contexts.

The pilot panels and participating HEIs agreed that not all criteria applied across all of the enablers, and that not all criteria were the same at unit-level and institution-level. For example, Vitality and Sustainability seem to align more naturally with the Strategy and Development enablers, while Rigour may have more relevance in the Responsibility or Connectivity enabler.

A revised set of criteria was proposed and discussed by the panels. In particular, it was suggested that the criterion of Rigour should be re-focussed as Reflection, described below.

Possible revision of assessment criteria

- Vitality

The extent to which the institution/unit fosters a thriving and inclusive research culture and empowers individuals to succeed and engage in the highest quality research outcomes.

- Reflection

The extent to which the institution/unit reflects on and evaluates the impact of its policies on research culture, people and environment. This includes sharing of good practices and learning, embracing innovation and demonstrating a willingness to learn from experiences.

- Sustainability

The extent to which the institution/unit ensures their research culture, people and environment remain healthy and build for the future. This should include sustained investment in people and in infrastructure, effective and responsible use of resources and the ability to adapt and remain resilient to evolving needs and challenges.

However, there was some feeling that Reflection could be covered in other areas of the assessment framework (such as the quality descriptors), and consideration should be given to whether this criterion could be dropped, leaving Vitality and Sustainability as in REF 2021.

Quality Descriptors

Feedback on the quality descriptors for assessment was that the focus on research culture only as a means of generating research (outputs) was not helpful for the assessment of PCE; the wording ‘conducive to producing research…’ was felt to be problematic in this context. Instead, the assessment should seek to capture the quality of research environment and culture, and the support for people. This will provide the foundation for the generation of high-quality research output and impact, and these aspects will be measured by the other elements of the REF exercise. The quality descriptors could be revised to reflect this.

Both the participating HEIs and the assessment panels felt that the most helpful approach was that the descriptors should refer to evidence that a policy was in place, evidence that the policy was being followed, evidence that the policy was having an impact and evidence that the policy was influencing the sector. There was also general agreement that the requirement for an institution or unit to have influence on the broader sector was problematic and might restrict four-star performance for some types of institution (such as those with more of a teaching focus). Instead, the assessment panels considered a self-reflection and continuous improvement approach to be the requirement for four-star quality.

This could be reflected in a revised set of quality descriptors based on a maturity framework as outlined in Table 2.

| Rating | Descriptor | Full description |
|---|---|---|
| 0 | Identification | The need to develop a strategy or approach is recognised, but a clear course of action is not established, or evidence provided does not justify that an appropriate strategy is in place. |
| 1* | Intent/Initiative (policy and guidance) | A policy or approach is in place outlining strategy, priorities and indicators of positive research culture. |
| 2* | Implementation (engagement, training and support) | There is evidence that the approach is being followed and that there are mechanisms for evaluation. |
| 3* | Impact (action and delivery) | There is evidence that the approach has a positive impact on research culture and as a result people achieve better results, including examples. |
| 4* | Insight (monitoring and reflection) | There is evidence that the institution has reflected on the approach and has sustainable plans for the future strategy development. This could include sharing of good practice, and/or learning from experiences. |

These quality descriptors were seen as additive, in that institutions will need to be achieving the requirements for 1* before they can get 2*, and so on. For example, reflection and monitoring are not sufficient for 4* quality if there is no evidence that the policy has been implemented.
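The additive reading of the descriptors can be illustrated with a short sketch. It is offered only as an illustration of the logic, not as a scoring method used in the pilot: the function name `additive_rating`, the boolean representation of evidence and the shortened level names are all assumptions made for this example, and in practice the judgement at each level would be qualitative and made by the panels.

```python
from typing import Mapping

# Levels in ascending order, using shortened descriptor names from Table 2.
# (Hypothetical representation: the pilot did not define a data format.)
LEVELS = ["Intent/Initiative", "Implementation", "Impact", "Insight"]


def additive_rating(evidence: Mapping[str, bool]) -> int:
    """Return a star rating (0 to 4) under an additive reading.

    A level only counts if every level below it is also evidenced,
    so the rating is the number of consecutive levels met from 1* up.
    """
    rating = 0
    for level in LEVELS:
        if not evidence.get(level, False):
            break  # a gap at this level caps the rating here
        rating += 1
    return rating


# Reflection (Insight) is evidenced, but implementation is not:
example = {
    "Intent/Initiative": True,
    "Implementation": False,
    "Impact": False,
    "Insight": True,
}
print(additive_rating(example))  # 1
```

In this sketch, evidence of reflection alone does not lift the rating, because the first unmet level caps the result.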

There were some differing opinions on defining 4* research culture. It could be about exhibiting exemplary practice and continual improvement through both outreach (disseminating ideas to the wider sector) and in-reach (seeking and incorporating best practice from across the sector). There was some feeling that retaining influence as a criterion for 4* quality was useful (some panels saw this in submissions and felt it should be recognised) but that that influence should not be a requirement for 4* research and that it could be either internal or external influence.

Indicators

For the pilot, the guidance was flexible: it suggested examples of quantitative and qualitative evidence and contextual information, but allowed scope for participating HEIs to cite evidence in multiple sections of the template (that is, for multiple indicators across multiple enablers). Institutions were told not to feel constrained by the examples given and were encouraged to provide any additional evidence they felt better represented their performance in PCE.

In general, participating HEIs and assessment panels felt that indicators were largely placed under the correct enabler, though there were overlaps. Some HEIs wanted clear guidance on where an indicator was being used, while others felt the overlaps were manageable. Similarly, some HEIs felt that the relationship between the indicator and the suggested sources of evidence was not always clear. Assessment panels managed the assessment with the evidence available and were able to look for and consider appropriate evidence in other sections of the template.

Flexibility and focus

There was general agreement that narrative was a critical part of the submissions and that evidence should be seen as provided in support of the narrative, though there was acknowledgement that narrative without supporting evidence would not be conducive to a robust assessment. This approach would benefit from the flexibility of evidence sources which was trialled in the pilot.

The assessment panels felt that the volume and nature of the evidence provided in the pilot submissions were more than was required for a reasonable, yet robust assessment. One approach to consider would be a framework where HEIs are given scope to identify their own areas of focus within PCE. They would provide a rationale as to why they have chosen to focus on these areas and an explanation of how they are exhibiting excellent performance, or improving performance, in these areas. Overall, the PCE assessment should be about identifying and rewarding excellence as a way of highlighting and sharing good practice in the sector.

Some submissions included case studies, and this was generally appreciated by the panels. However, there was acknowledgement that this would increase the burden of preparing and assessing submissions. An approach which allows HEIs to focus on certain aspects of their research culture could allow the specific discussion of certain aspects of research culture without the requirement of case studies.

Mandatory data and evidence

Some high-level metrics or evidence sources are required in the assessment to facilitate comparison between submissions. These would need to be existing sources of data that are already being collected by HEIs across the sector (by all types of institution). Ideally they should be collected centrally by the REF team (such as HESA data), and they could include many of the data sources used for assessment of Environment in previous REF exercises. Meaningful benchmarks for these data sources should also be provided to facilitate assessment. Consideration should be given to differences in the mandatory data at Main Panel level.

Optional supporting evidence

There was concern that having optional metrics and evidence may not be interpreted as intended; submitting HEIs may feel that they will be disadvantaged if optional evidence is not provided. In order to mitigate the burden, lists of suggested additional evidence sources should be provided, and guidance should be clear that these additional sources are optional and should only be included where available and pertinent. HEIs may also choose to include additional evidence not listed in the guidance, where they feel it appropriate to represent their performance. In particular, consideration should be given to any required sources of evidence at unit level: there are particular issues with small institutions and small units, there was a significant burden in providing unit-level data, and there were potential issues with providing data which did not identify individuals.

Staff data

Staff data need to be clearly defined. The relevant population is not just staff with responsibility for research, or staff who contribute to the volume measure. Ideally the assessment of PCE should encompass all staff who contribute to the research process generating new meaningful knowledge and understanding. However, in practice there may need to be some compromises struck allowing for what data are available. There was universal agreement that a clear definition of staff for PCE was required, and this should be a single PCE definition across all enablers and indicators.

Refinement of Indicators

The aim of the pilot was to test a wide range of potential indicators of positive research culture with the intention of focussing on a tighter set for the full-scale assessment. The participating HEIs were clear that the range of indicators in the pilot would be unmanageable for a full exercise. Any compulsory indicators should be carefully selected to ensure that they can be fairly applied across all types of institution. It was also clear that data were not always available to different institutions (for example, KEF data are only available to English institutions); in many cases data collection placed a significant burden on the institutions and/or would be impossible retrospectively. We should also consider where data could be collected automatically to reduce burden, though this would not be possible for many of the proposed indicators.

The participating HEIs had a wide variety of observations on the indicators.

- In many cases the relevant population for the indicator was unclear. The guidance will need to clearly define what is meant by people in the PCE assessment, and ideally this definition should align with broader REF definitions.

- Many of the indicators focussed on quantitative measures which might not be appropriate for the assessment and could shift the focus of the assessment onto performance rather than purpose. It would be better if indicators steered towards concrete examples of initiatives or policies and their impact rather than just recording data or providing evidence or documenting processes.

- Many of the indicators could not be evenly applied across the exercise. They might not be appropriate for all disciplines, or data may not be available for all types of institution. Careful consideration should be given to the broad applicability of indicators in a full-scale exercise.

- The descriptions of the indicators were open for the pilot and this flexibility was a necessary part of the process; there was a general feeling that descriptions will need to be more tightly worded for the full guidance, though some appreciated some flexibility in the assessment framework.

The assessment panels gave careful consideration to the rationalisation of the list of indicators and evidence with a view to reducing the list of piloted indicators to a much tighter framework for a full-scale REF exercise. Across the panels there were often differing opinions about indicators. In many cases some of the panels were supportive of an indicator, while others found it to be problematic. Similarly, in many cases the panels suggested substantial development or revision would be necessary for inclusion of an indicator. Finally, some indicators were considered important, but were not pertinent to the assessment and/or were captured by other existing processes (such as legal requirements or conditions of funding). Although these indicators are not recommended for inclusion in the assessment framework for REF 2029, they are considered important by pilot panels. They should not be discounted, and due consideration should be given to how they may be developed for inclusion in future exercises.

The indicators listed below are those where there was reasonable agreement between the panels that they were useful to the assessment and could be applied across a range of institutions and subjects/disciplines. These indicators are presented to the REF 2029 panels for consideration during the criteria setting phase of the exercise.

Indicators which were considered useful to the assessment and which could be broadly applied

- Context

How research is structured across the submitted unit (including research groups or sub-units), and how this relates to departmental or other administrative structures within the institution.

Operational and scholarly infrastructure supporting research within the submitting unit, including estate and facilities, advanced equipment, IT resources or significant archives and collections.

- Strategy

Documented evidence of the strategy and strategic priorities, with coherent plans towards their achievement. May include: Key Performance Indicators, descriptions of any consultation or co-creation activities, reporting lines and accountability mechanisms, approaches for monitoring and evaluation.

Data on improvement as a result of strategic initiative(s).

- Responsibility

Conformity of measures with the Concordat to Support Research Integrity, including through cross-references to annual reporting. Though compliance alone is not pertinent to the assessment, institutions should demonstrate where such policies have had an impact on their research culture and environment.

Documented evidence that membership of relevant committees or involvement in other relevant academic citizenship activities is appropriately recognised (for example, in workloads or promotion criteria).

Documented evidence of participation in relevant networks, events and initiatives leading to changes in policy and practice.

- Connectivity

Documented evidence that the infrastructure, processes and mechanisms in place to support staff and research students to share research, knowledge and expertise are working effectively.

Support for the development of research networks, centres, groups and events (such as waiving room hire charges, communications).

Documented evidence of the quality and significance of research collaborations across different disciplines, institutions, or with external partners, organisations or communities.

The number and disciplinary spread of co-authored or co-produced research outputs and activities.

Documented steps towards open research that go beyond Open Access (for example, support for open/FAIR data).

The number and share of openly-accessible research outputs and activities (for example, open databases, public performances).

Number of times shared datasets are accessed or downloaded on openly-accessible platforms.

- Inclusivity

Numbers of each of the following:

– Technical and research-supporting staff

– Newly employed early career researchers

– Staff employed on permanent, fixed-term and atypical contracts

Longitudinal data on research and research enabling staff:

– With no known disability, disability declared or unknown

– Declaring male, female, other or unknown

– Declaring as white, Black, Asian, other/mixed or unknown at institution-level.

Longitudinal data on average (mean and median) institutional gender, ethnicity and disability pay gap (for academic and research-enabling staff stratified by grade).

Documented evidence of implementation of principles and practices from responsible research assessment initiatives, such as those outlined in CoARA or DORA.

Documented changes to assessment processes, criteria and guidance, as informed by responsible research assessment principles.

Evidence of how equality and diversity issues are addressed, in relation to support for acquiring research funding, or accessing scholarly or operational infrastructure.

The development of targeted leadership programmes, with demonstration that under-represented groups are enabled to participate and benefit.

Activities to prevent harassment and bullying, including awareness-raising, training and the creation of safe spaces.

Monitoring and assessment of the effectiveness of mechanisms to safeguard and protect whistleblowers or victims of bullying and harassment, including resolution satisfaction.

- Development